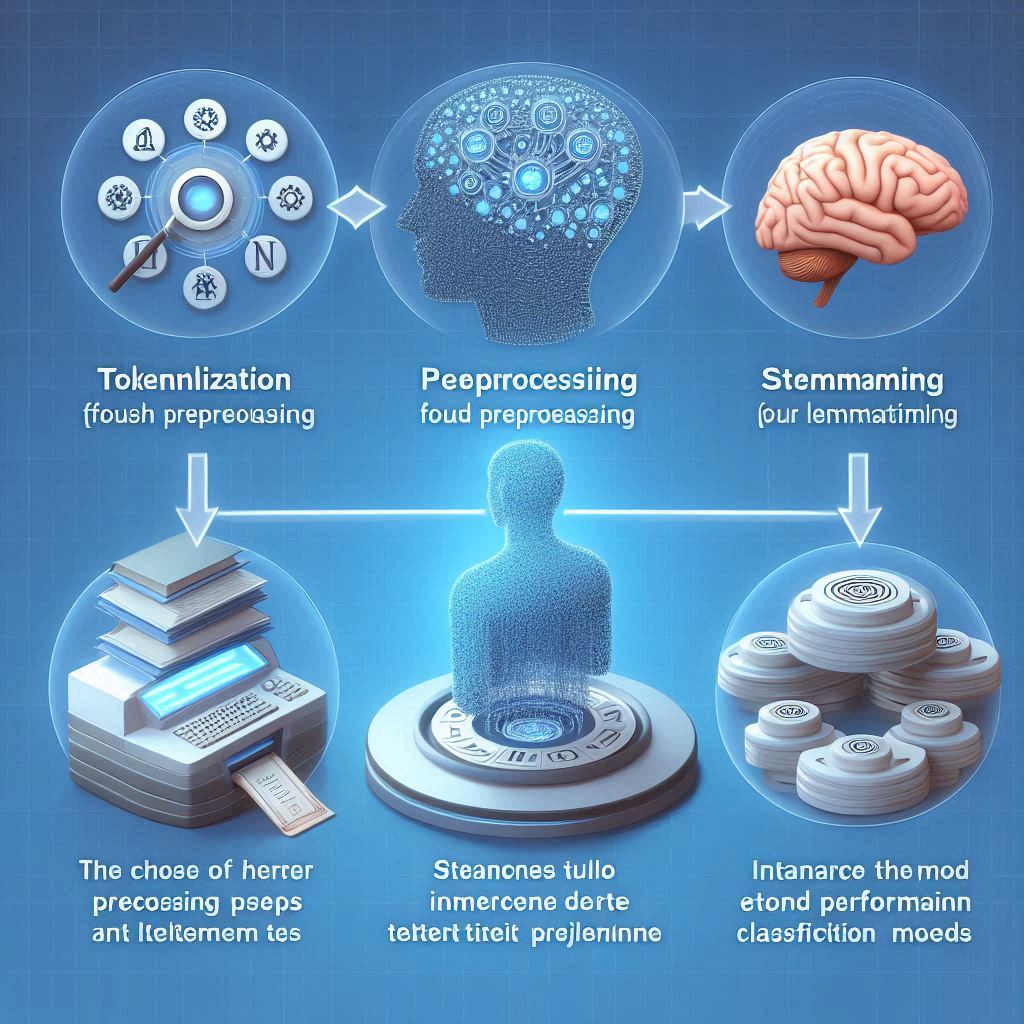

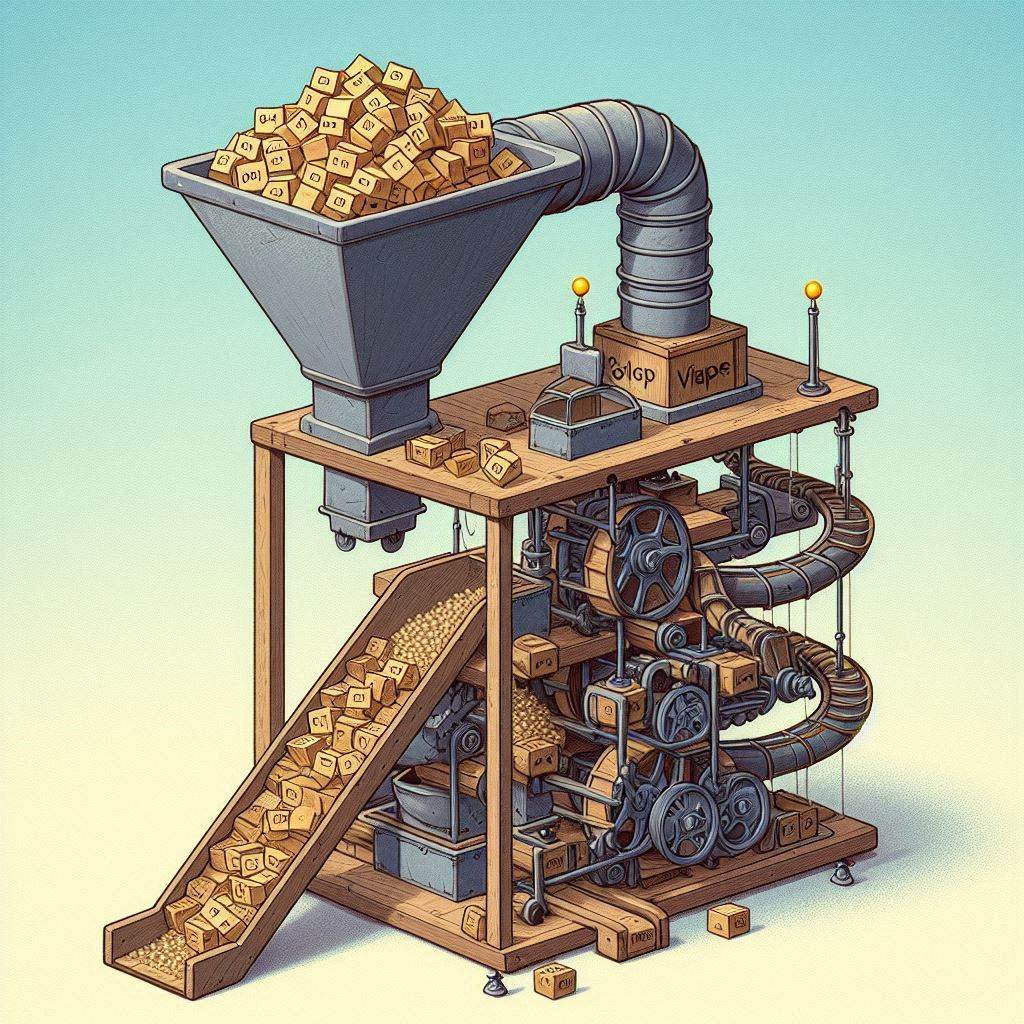

Bidirectional Encoder Representations from Transformers (BERT), a model introduced by Google in 2018, has reshaped the field of Natural Language Processing (NLP). BERT uses a Transformer architecture to process text bidirectionally: the representation of each word takes into account the context both before and after it. This lets BERT capture meaning that a strictly left-to-right model would miss, which improves results on NLP tasks. As a result, BERT has become a widely used tool in AI and machine learning, and it continues to shape the NLP landscape.
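As a rough illustration of what "bidirectional" means here, the toy sketch below compares which tokens a given word may look at under a left-to-right scheme versus a bidirectional one. The function name `attention_mask` and the example sentence are made up for illustration; this shows only the visibility pattern, not BERT's actual implementation.

```python
def attention_mask(seq_len: int, bidirectional: bool) -> list[list[int]]:
    """Entry [i][j] is 1 if token i may attend to token j, else 0."""
    return [
        [1 if bidirectional or j <= i else 0 for j in range(seq_len)]
        for i in range(seq_len)
    ]


tokens = ["the", "bank", "of", "the", "river"]
left_to_right = attention_mask(len(tokens), bidirectional=False)
both_directions = attention_mask(len(tokens), bidirectional=True)

# Which tokens can "bank" (index 1) see in each setting?
visible_causal = [t for t, m in zip(tokens, left_to_right[1]) if m]
visible_bidir = [t for t, m in zip(tokens, both_directions[1]) if m]

print(visible_causal)  # ['the', 'bank']
print(visible_bidir)   # ['the', 'bank', 'of', 'the', 'river']
```

Only in the bidirectional case does "bank" get to see "river", the word that disambiguates it.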

Key Takeaways from Highlighted Videos:

• BERT is a model for Natural Language Processing developed by Google in 2018

• BERT utilizes a Transformer architecture to process text bidirectionally

• BERT can gain a deeper understanding of language and produce better results for NLP tasks

• BERT is a powerful tool in the AI and Machine Learning industries

Daily Bidirectional Encoder Representations Summary:

Bidirectional Encoder Representations from Transformers (BERT) is a model for Natural Language Processing developed by Google in 2018. It uses a Transformer architecture to process text bidirectionally, taking into account the context before and after a given word. This allows BERT to gain a deeper understanding of language and produce better results on NLP tasks. The video descriptions collected below cover it in more detail.

Fri Apr 28 2023 9:23:01 UTC

More information at: https://gpt5.blog/bert-bidirectional-encoder-representations-from-transformers/

BERT stands for "Bidirectional Encoder Representations from Transformers" and is a natural language processing model developed by Google in 2018. It uses the Transformer architecture and processes text bidirectionally, taking into account the context both before and after a word. This enables BERT to gain a deeper understanding of language and achieve better results on NLP tasks. It became a revolutionary model in AI and machine learning and has significantly shaped the landscape of natural language processing.

Kind regards from GPT-5 (https://gpt5.blog) Powered by J.O. Schneppat

Sun Feb 26 2023 0:43:39 UTC

BERT 'bert-base-uncased' model in 4 lines:

```python
# Notebook cell: install the library
!pip install transformers

from transformers import pipeline
unmasker = pipeline('fill-mask', model='bert-base-uncased')
unmasker("Artificial Intelligence [MASK] take over the world.")
```

Full code: https://github.com/vinayakkankanwadi/bert
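The fill-mask pipeline returns a list of candidate completions, each a dictionary with keys such as `score` and `token_str`. A minimal sketch of reading that output follows. The helper names `summarize` and `run_demo` are hypothetical, and `run_demo` assumes that `transformers` and a backend such as PyTorch are installed and that the model weights can be downloaded.

```python
def summarize(predictions, limit=3):
    """Return (token, rounded score) pairs for the highest-scoring candidates."""
    ranked = sorted(predictions, key=lambda p: p["score"], reverse=True)
    return [(p["token_str"], round(p["score"], 3)) for p in ranked[:limit]]


def run_demo():
    # Imported here so that summarize() can be used without the heavy dependency.
    # Downloads the bert-base-uncased weights on first use.
    from transformers import pipeline

    unmasker = pipeline("fill-mask", model="bert-base-uncased")
    predictions = unmasker("Artificial Intelligence [MASK] take over the world.")
    return summarize(predictions)
```

Calling `run_demo()` in a notebook returns the top three candidate words for the `[MASK]` position together with their scores.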

Sun Sep 4 2022 17:51:09 UTC

Natural Language Processing

https://github.com/b1nch3f/Deep-learning-from-ground-up-with-Tensorflow/tree/main/14%20Natural%20Language%20Processing:%20Pretraining

Tue Nov 30 2021 14:31:08 UTC

This video is a revised version of video [30], in which I learn how to use the Bidirectional Encoder Representations from Transformers (BERT) algorithm to extract features from a sentence.

The materials for the experiment are available in my git repository at: https://github.com/heriistantoo/bert-ekstraksi

Or on my GitHub at: https://github.com/heriistantoo/bert-ekstraksi/blob/master/plm-bert-ekstraksi-adv.ipynb
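For readers who want a feel for sentence feature extraction with BERT before opening the notebook, here is a minimal sketch. It is not taken from the linked repository: the helper names `mean_pool` and `extract_features`, the choice of `bert-base-uncased`, and the use of mean pooling are all assumptions made for illustration.

```python
def mean_pool(token_vectors, attention_mask):
    """Average the vectors of real tokens, skipping padding positions (mask == 0)."""
    kept = [vec for vec, keep in zip(token_vectors, attention_mask) if keep]
    if not kept:
        raise ValueError("attention_mask selects no tokens")
    dim = len(kept[0])
    return [sum(vec[d] for vec in kept) / len(kept) for d in range(dim)]


def extract_features(sentence, model_name="bert-base-uncased"):
    # Imported here so that mean_pool() can be used without the heavy dependencies.
    # Assumes torch and transformers are installed; downloads weights on first use.
    import torch
    from transformers import AutoModel, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModel.from_pretrained(model_name)
    model.eval()

    inputs = tokenizer(sentence, return_tensors="pt")
    with torch.no_grad():
        outputs = model(**inputs)

    # last_hidden_state has shape (batch, tokens, hidden_size); take the one sentence.
    hidden = outputs.last_hidden_state[0].tolist()
    mask = inputs["attention_mask"][0].tolist()
    return mean_pool(hidden, mask)
```

`extract_features("some sentence")` returns one fixed-length vector per sentence, which can then be fed to a downstream classifier. Another common choice is to use the vector at the `[CLS]` position instead of the mean.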

Thanks to the tools that made this video possible: recorded with Open Broadcaster Software, edited with DaVinci, thumbnail made with Inkscape, images downloaded from Pixabay.

For business inquiries, email: heriistantoo@gmail.com

Sun Nov 7 2021 19:26:48 UTC

Integration of the BERT transformer neural network to answer questions based on texts (Spanish and English). I serialized the BERT models in Python and then imported them into C++ with LibTorch. I obtained the C++ tokenizer from Kamalkraj's implementation (https://github.com/kamalkraj/BERT-NER/tree/dev/cpp-app).

Links of interest:

An excellent tutorial on transformer networks: Part 1: https://www.youtube.com/watch?v=dichIcUZfOw&t=1s Part 2: https://www.youtube.com/watch?v=mMa2PmYJlCo Part 3: https://www.youtube.com/watch?v=gJ9kaJsE78k

Transformer networks paper: https://arxiv.org/abs/1706.03762

Book for understanding BERT: Getting Started with Google BERT: Build and train state-of-the-art natural language processing models using BERT. URL: https://leer.amazon.com.mx/kp/embed?asin=B08LLDF377&preview=newtab&linkCode=kpe&ref_=cm_sw_r_kb_dp_Y92JR9RB4R589EFYKZQ6

BERT tutorial: https://neptune.ai/blog/how-to-code-bert-using-pytorch-tutorial

BERT paper: https://arxiv.org/abs/1810.04805

Excellent Deep Learning and PyTorch tutorial in Spanish: https://www.youtube.com/watch?v=ftlqZwb33SE&list=PLWzLQn_hxe6ZlC9-YMt3nN0Eo-ZpOJuXd

Mon Jul 19 2021 10:57:45 UTC

BERT (Bidirectional Encoder Representations from Transformers), developed by Google, is a method of pre-training language representations that, at its release, obtained state-of-the-art results on a wide array of Natural Language Processing (NLP) tasks.
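One of BERT's pre-training objectives is masked language modeling: about 15% of the input tokens are selected, and of those, 80% are replaced by `[MASK]`, 10% by a random token, and 10% are left unchanged; the model is trained to predict the original tokens. The sketch below imitates that corruption step on a list of word strings. The function name `mask_tokens`, the tiny vocabulary, and the per-token coin flip (rather than sampling an exact 15% of positions) are simplifications chosen for illustration.

```python
import random


def mask_tokens(tokens, vocab, rng, mask_prob=0.15):
    """Return (corrupted tokens, labels); labels are None where nothing is predicted."""
    corrupted, labels = [], []
    for tok in tokens:
        # Special tokens are never selected; other tokens are selected with mask_prob.
        if tok in ("[CLS]", "[SEP]") or rng.random() >= mask_prob:
            corrupted.append(tok)
            labels.append(None)
            continue
        labels.append(tok)  # the model must recover the original token here
        roll = rng.random()
        if roll < 0.8:
            corrupted.append("[MASK]")
        elif roll < 0.9:
            corrupted.append(rng.choice(vocab))
        else:
            corrupted.append(tok)
    return corrupted, labels


sentence = ["[CLS]", "bert", "reads", "text", "in", "both", "directions", "[SEP]"]
toy_vocab = ["bert", "reads", "text", "in", "both", "directions", "model"]
corrupted, labels = mask_tokens(sentence, toy_vocab, random.Random(0))
print(corrupted)
print(labels)
```

Because the model sees context on both sides of each masked position, this objective is what lets the pre-trained representations be bidirectional.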