Machine Learning (ML) has gained prominence in recent years as a technique for automating decision-making and prediction tasks using statistical methods and advanced algorithms. In particular, Generative Pre-trained Transformers (GPTs) are a type of ML model that has shown remarkable results in natural language processing (NLP) applications. They are large-scale neural networks pre-trained on vast amounts of text data, much of it from the web. These models can generate human-like text, answer questions, summarize documents, and even carry out conversations, among other tasks. In this article, we will explore the basics of Machine Learning and its relationship with Generative Pre-trained Transformers, and highlight some examples of their applications and challenges.

What is Machine Learning?

At a high level, Machine Learning is a subfield of Artificial Intelligence (AI) that involves training machines to learn from data without being explicitly programmed. A learning algorithm builds a model from training data (labeled data, in the case of supervised learning), and the model is then used to make predictions or decisions about new data. In other words, a machine learning algorithm can take in large amounts of data, learn important patterns and relationships, and use that knowledge to handle new, unseen data. This is sometimes described as “learning from experience.” Machine learning algorithms can be applied to a wide range of tasks, including image recognition, natural language processing, autonomous vehicles, recommendation systems, fraud detection, and many more. Key factors behind the recent surge in interest and adoption include advances in computing power, the availability of large-scale datasets, and the development of efficient learning algorithms.

There are three main types of machine learning:

In supervised learning, labeled training data is used to teach the machine learning algorithm to make predictions or decisions about new, unseen data. This is the most common type of machine learning and can be used in a wide range of applications such as image recognition and classification, natural language processing, and recommendation systems. Unsupervised learning, on the other hand, uses unlabeled data to uncover patterns and relationships in the data. This approach is often used for tasks such as clustering, anomaly detection, and dimensionality reduction. Reinforcement learning is a type of learning in which an agent learns how to act in an environment so as to maximize a cumulative reward signal. This often involves trial and error: the agent learns from its mistakes and adjusts its behavior accordingly. Reinforcement learning is used in applications such as game-playing and robotics.
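Reinforcement learning's trial-and-error loop can be illustrated with a minimal sketch of an epsilon-greedy agent on a multi-armed bandit. This is one of the simplest reinforcement learning settings: it has a single state, so there is no long-term planning. The three reward means, the noise level, and epsilon = 0.1 are arbitrary assumptions chosen for illustration.

```python
import random

def epsilon_greedy_bandit(true_means, steps=1000, epsilon=0.1, seed=0):
    """Learn which arm of a bandit pays best by trial and error.

    true_means are hypothetical average rewards hidden from the agent;
    each pull returns that mean plus Gaussian noise.
    """
    rng = random.Random(seed)
    n_arms = len(true_means)
    estimates = [0.0] * n_arms  # agent's running estimate of each arm's reward
    counts = [0] * n_arms       # how many times each arm has been pulled
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: try a random arm
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])  # exploit best guess
        reward = rng.gauss(true_means[arm], 0.1)  # environment returns a noisy reward
        counts[arm] += 1
        # Incremental mean: move the estimate toward the observed reward.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts

estimates, counts = epsilon_greedy_bandit([0.2, 0.5, 0.9])
best_arm = max(range(3), key=lambda a: estimates[a])
print(best_arm, counts)
```

Occasional random exploration is what lets the agent discover the best arm. A purely greedy agent could lock onto the first arm that returns a positive reward.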

Applications of Machine Learning

Machine Learning has tremendous potential for a wide range of applications, from automating routine tasks to discovering novel insights and improving decision-making. Some of the key areas where machine learning is being applied are natural language processing, image and speech recognition, recommender systems, fraud detection, anomaly detection, and predictive maintenance. Another area where machine learning has shown great promise is in healthcare, including diagnosis, drug discovery, and personalized medicine. In addition, machine learning is being used in finance, transportation, energy, and many other industries. Overall, as the amount of data that businesses and organizations collect continues to grow, the potential applications of machine learning are only going to expand further.

Successful applications of machine learning

- Image recognition for identifying objects in photos or videos

- Natural language processing for chatbots and voice assistants

- Recommendation systems for personalized product or content recommendations

- Fraud detection in financial transactions

- Predictive maintenance for equipment or vehicles

- Medical diagnosis and treatment recommendations based on patient data

- Personalized marketing campaigns based on customer data

- Sentiment analysis for understanding customer opinions and feedback

- Autonomous driving and navigation for vehicles

- Speech recognition and language translation

- Credit risk assessment for lending decisions

- Energy demand forecasting for optimizing energy usage

- Supply chain optimization and inventory management

- Social media analytics for understanding trends and user behavior

- Video and audio content analysis for content moderation and compliance

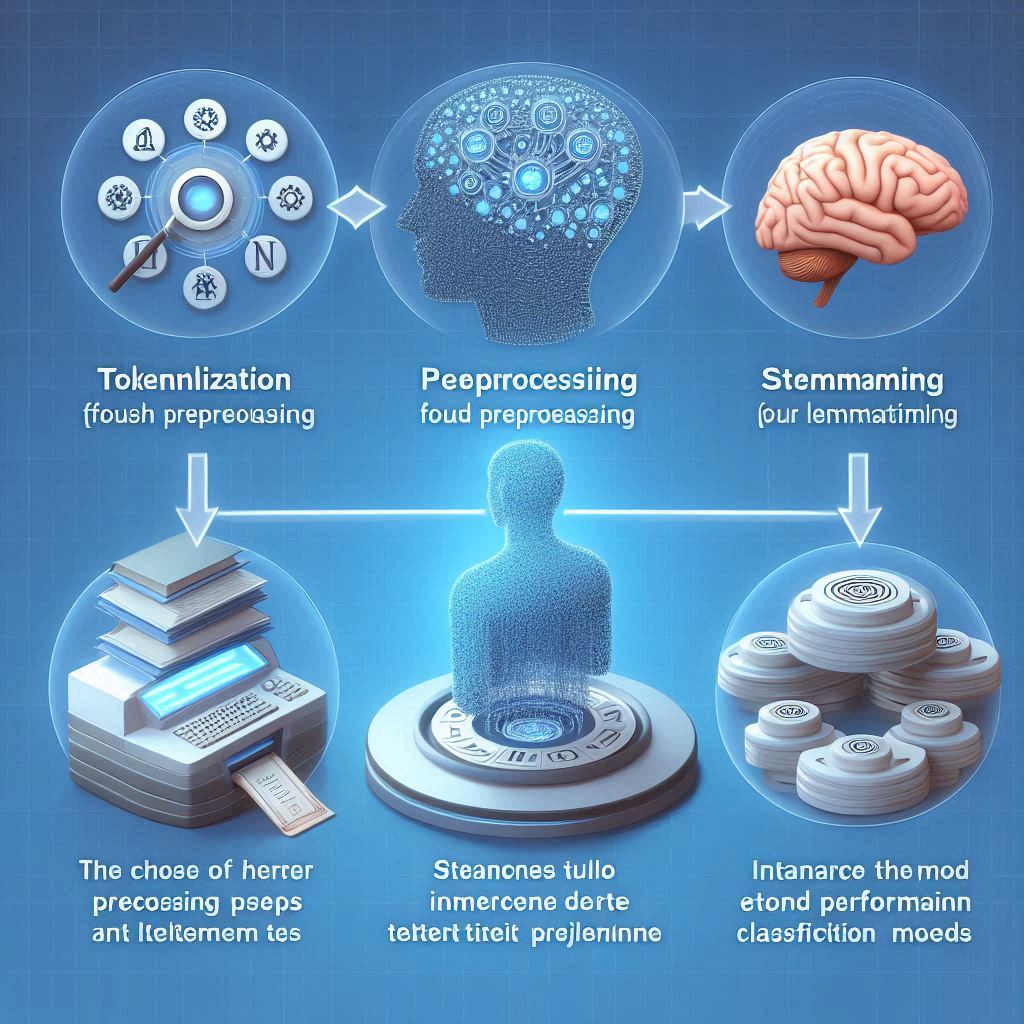

How Machine Learning Works

The goal of machine learning is to develop models whose behavior is learned from patterns in data rather than explicitly programmed, and that generalize accurately to new inputs. At its core, machine learning works by iteratively improving a model’s ability to make predictions or decisions based on labeled or unlabeled data. This is achieved through training. The algorithm is fed large amounts of data and identifies patterns and relationships, which it then uses to make informed predictions or decisions. In supervised settings, training adjusts the model’s parameters to minimize the difference between predicted and actual outcomes, as measured by a loss function. Once the model has been trained on enough data, it can be used to make predictions or decisions on new, unseen data.
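Here is a minimal sketch of this training loop. It assumes a one-variable linear model and uses mean squared error to measure the "difference between predicted and actual outcomes." Gradient descent repeatedly nudges the parameters w and b in the direction that reduces that error. The data, learning rate, and iteration count are illustrative choices.

```python
def fit_line(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction errors for the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of MSE = mean(error^2) with respect to w and b.
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        # Step against the gradient to reduce the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]  # generated from y = 2x + 1
w, b = fit_line(xs, ys)
print(round(w, 3), round(b, 3))  # → 2.0 1.0
```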

Supervised Learning

Supervised learning is one of the most common types of machine learning. It involves training an algorithm on a dataset of labeled examples, that is, data where the desired output is already known. During the training phase, the algorithm learns to map input data to the correct output by finding patterns in the labeled examples. Once it has been trained on enough labeled data, it can be used to make predictions on new, unseen data. Regression and classification are two common types of supervised learning tasks, where the goal is to predict a continuous output variable and a categorical output variable, respectively.
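The sketch below makes the path from labeled examples to predictions concrete with a nearest-centroid classifier. This is one of the simplest supervised classifiers, chosen here for brevity rather than because it is typical. "Training" averages the examples of each class, and prediction picks the closest average. The feature names and data are hypothetical.

```python
import math

def train_nearest_centroid(points, labels):
    """'Training': average the labeled examples of each class into a centroid."""
    sums, counts = {}, {}
    for p, label in zip(points, labels):
        s = sums.setdefault(label, [0.0] * len(p))
        for i, v in enumerate(p):
            s[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in s] for label, s in sums.items()}

def predict(centroids, point):
    """Predict the label whose centroid is closest (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(centroids[label], point))

# Hypothetical labeled examples: (hours studied, hours slept) -> outcome.
points = [(1, 4), (2, 5), (2, 3), (8, 7), (9, 8), (7, 8)]
labels = ["fail", "fail", "fail", "pass", "pass", "pass"]
centroids = train_nearest_centroid(points, labels)
print(predict(centroids, (8, 6)))  # → pass
```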

Unsupervised Learning

Unsupervised learning is another type of machine learning that involves training an algorithm on a dataset with no labeled examples. Its goal is to discover patterns, groupings, or distributions within the data. There is no explicit output or target variable and no preconceived notion of what those patterns might be. Clustering and anomaly detection are two common unsupervised learning tasks: clustering finds groupings within the data, while anomaly detection identifies outliers that do not fit any of the discovered patterns. Unsupervised learning is often used when labeled data is scarce or the true output is unknown, and it can be a useful way to explore and understand patterns in large datasets.
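Clustering can be sketched with k-means (Lloyd's algorithm), a standard clustering method. It alternates between two steps: assigning each point to its nearest centroid, and moving each centroid to the mean of its points. Two simplifying assumptions apply here. The first k points serve as the starting centroids, and the data is made up.

```python
import math

def k_means(points, k, iterations=100):
    """Cluster unlabeled points into k groups (Lloyd's algorithm)."""
    # Simple deterministic start: use the first k points as centroids.
    # (Libraries typically use smarter seeding such as k-means++.)
    centroids = [list(p) for p in points[:k]]
    assignment = None
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        new_assignment = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        if new_assignment == assignment:
            break  # no point changed cluster, so the algorithm has converged
        assignment = new_assignment
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids, assignment

points = [(1, 1), (1.5, 2), (2, 1.5), (8, 8), (8.5, 9), (9, 8.5)]
centroids, labels = k_means(points, k=2)
print(labels)     # → [0, 0, 0, 1, 1, 1]
print(centroids)  # → [[1.5, 1.5], [8.5, 8.5]]
```

No labels are supplied. The two groups emerge purely from the distances between points.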

Deep Learning

Deep learning is a type of machine learning loosely inspired by the structure and function of the human brain. It involves building artificial neural networks with many layers that learn to identify patterns and make decisions based on data. Many traditional machine learning algorithms rely on hand-engineered features. Deep learning networks, by contrast, can learn useful representations directly from raw data, such as images or audio, and they tend to benefit from much larger and more complex datasets. This makes them well suited to tasks like image recognition, speech recognition, language translation, and natural language processing. Training deep networks typically requires large amounts of data (often labeled) and significant computational resources, but the resulting models can achieve high levels of accuracy and make complex predictions or decisions.
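A small, hand-wired network shows why stacking layers with nonlinearities matters. No single linear unit can compute XOR, but one hidden layer of ReLU units can. The weights below were set by hand for illustration. In practice, a deep learning framework would learn such weights from data via backpropagation.

```python
def relu(v):
    """Rectified linear unit applied element-wise."""
    return [max(0.0, x) for x in v]

def dense(weights, biases, inputs):
    """One fully connected layer: weights @ inputs + biases."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]

def xor_network(x1, x2):
    # Hidden layer: two ReLU units that compute intermediate features
    # (roughly "at least one input on" and "both inputs on").
    hidden = relu(dense([[1, 1], [1, 1]], [0, -1], [x1, x2]))
    # Output layer: a linear combination of the hidden features.
    return dense([[1, -2]], [0], hidden)[0]

for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, xor_network(a, b))
```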

Challenges and Limitations of Machine Learning

One major ethical challenge of machine learning is the problem of algorithmic bias. Machine learning models rely on training data to learn patterns and make decisions, and if that data is biased, the resulting models are likely to reproduce, or even amplify, those biases. Biases can be introduced by factors like historical discrimination, skewed data samples, or even the personal biases of those creating or selecting the training data. These biases can lead to unfair or discriminatory outcomes, particularly in areas like hiring, lending, and criminal justice. Addressing algorithmic bias will require both technical solutions, such as better algorithms and more diverse training data, and ethical frameworks and oversight to ensure that the use of machine learning is fair and transparent.
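One simple, widely used check for unfair outcomes is to compare selection rates across groups, an idea known as demographic parity. The sketch below uses hypothetical lending decisions. A common rule of thumb, the "four-fifths rule" from US employment guidance, treats a lowest-to-highest ratio below 0.8 as a warning sign. This is only one of several fairness metrics, and on its own it cannot establish that a model is fair.

```python
def selection_rates(decisions, groups):
    """Fraction of positive decisions (e.g. 'approve') within each group."""
    totals, positives = {}, {}
    for d, g in zip(decisions, groups):
        totals[g] = totals.get(g, 0) + 1
        positives[g] = positives.get(g, 0) + d
    return {g: positives[g] / totals[g] for g in totals}

# Hypothetical model decisions (1 = approved) for applicants in two groups.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(decisions, groups)
print(rates)  # → {'A': 0.75, 'B': 0.25}
# Ratio of the lowest to the highest selection rate; values well below 1 flag a disparity.
ratio = min(rates.values()) / max(rates.values())
print(round(ratio, 2))  # → 0.33
```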

In addition to ethical challenges, there are also technical limitations to consider when using machine learning. One key limitation is the need for high-quality data. Supervised learning in particular requires substantial amounts of labeled data for training, which can be time-consuming and costly to obtain. Machine learning models can also struggle with cases that are not well represented in the training data or that deviate significantly from the patterns seen during training. A related problem is overfitting, in which a model fits its training data, noise included, so closely that it fails to generalize and makes inaccurate predictions on new data. Addressing these technical challenges will require a combination of advances in data annotation and collection methods and more sophisticated machine learning algorithms that are better able to handle cases that deviate from the norm.
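Overfitting is easiest to see by comparing error on the training data with error on held-out data. This sketch uses synthetic data and two illustrative models. A 1-nearest-neighbor "memorizer" reproduces every noisy training label exactly, so its training error is zero. Yet it does worse on new points than a straight-line fit that averages the noise away.

```python
def mse(predictions, targets):
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)

def fit_least_squares(xs, ys):
    """Closed-form least-squares line y = slope * x + intercept."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return lambda x: slope * x + (my - slope * mx)

def fit_memorizer(xs, ys):
    """1-nearest-neighbor regression: copies the label of the closest training x."""
    return lambda x: ys[min(range(len(xs)), key=lambda i: abs(xs[i] - x))]

# Hypothetical data: the true relationship is y = 2x, and the training labels
# carry +1/-1 noise that a well-generalizing model should not memorize.
train_x = list(range(10))
train_y = [2 * x + (1 if x % 2 == 0 else -1) for x in train_x]
test_x = [x + 0.4 for x in range(9)]
test_y = [2 * x for x in test_x]

results = {}
for name, fit in [("linear", fit_least_squares), ("memorizer", fit_memorizer)]:
    model = fit(train_x, train_y)
    train_err = mse([model(x) for x in train_x], train_y)
    test_err = mse([model(x) for x in test_x], test_y)
    results[name] = (train_err, test_err)
    print(f"{name}: train MSE {train_err:.2f}, test MSE {test_err:.2f}")
```

A low training error alone is therefore no evidence of a good model. Only the error on data the model has not seen reveals how well it generalizes.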

Best Practices for Machine Learning

To ensure the reliability of machine learning models, it’s important to follow best practices for developing, testing, and deploying those models. One key best practice is to use version control to keep track of changes to the code and the data used for training and testing the model. This can help ensure that models are reproducible and allow for easy collaboration between team members. Additionally, it’s important to monitor and evaluate the performance of the model over time, both in terms of accuracy and efficiency. Regularly retraining the model with updated data can also help improve its performance and prevent it from becoming outdated. Finally, it’s important to take steps to ensure the security of the model and the data it processes, such as using secure communication protocols and access controls. By following these best practices, businesses can improve the reliability and effectiveness of their machine learning models.
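At its simplest, tracking the data used for training can mean recording a fingerprint of it. The sketch below stores a SHA-256 hash of the training rows together with the random seed and code version. Git and dedicated data-versioning tools would normally manage this in practice. The field names and values here are hypothetical.

```python
import hashlib
import json

def fingerprint(rows):
    """SHA-256 hash of the training data; any change to the data changes the hash."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def training_record(rows, seed, code_version):
    """Metadata to store alongside a trained model so the run can be reproduced."""
    return {
        "data_sha256": fingerprint(rows),
        "random_seed": seed,           # seed used for shuffling and initialization
        "code_version": code_version,  # e.g. a Git commit hash
    }

rows = [[1.0, 0], [2.5, 1], [3.1, 1]]  # hypothetical training rows
record = training_record(rows, seed=42, code_version="abc1234")  # hypothetical commit
print(json.dumps(record, indent=2))
```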

Steps for a successful machine learning implementation

- Define the problem: Identify the business problem or use case that you want to solve with machine learning.

- Gather the data: Collect and prepare the data that is needed for training the machine learning models.

- Choose the algorithm: Select the right algorithm or combination of algorithms that are best suited for solving the problem at hand.

- Train the model: Train the machine learning model on the prepared training data, tuning hyperparameters as needed (ideally against a separate validation set) to achieve the best performance.

- Evaluate the model: Evaluate the performance of the trained model on held-out test data that was not used during training or tuning.

- Deploy the model: Integrate the trained model into the business workflow or application, ensuring that it is secure and reliable.

- Monitor the model: Monitor the performance of the model over time and retrain it as needed to keep it up-to-date and accurate.
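The steps above can be sketched end to end with a deliberately tiny model: a single threshold on one feature. The data, the threshold model, and the 0.9 accuracy cut-off for retraining are all hypothetical choices. Deployment is omitted.

```python
import random

def split(rows, test_fraction=0.25, seed=0):
    """Shuffle, then hold out a test set the model never sees during training."""
    rows = rows[:]
    random.Random(seed).shuffle(rows)
    n_test = int(len(rows) * test_fraction)
    return rows[n_test:], rows[:n_test]

def accuracy(threshold, rows):
    """Share of rows where 'x >= threshold' matches the label."""
    return sum((x >= threshold) == bool(y) for x, y in rows) / len(rows)

def train_threshold(rows):
    """Learn the cut-off on x that best separates label 0 from label 1."""
    xs = sorted({x for x, _ in rows})
    candidates = [(a + b) / 2 for a, b in zip(xs, xs[1:])]
    return max(candidates, key=lambda t: accuracy(t, rows))

# Define the problem and gather data (hypothetical: label is 1 when x is large).
data = [(x, 0) for x in range(10)] + [(x, 1) for x in range(20, 30)]
# Choose a simple threshold model and train it on the training split only.
train_rows, test_rows = split(data)
threshold = train_threshold(train_rows)
# Evaluate on held-out data.
test_acc = accuracy(threshold, test_rows)
# Monitor: score a newer batch whose pattern has drifted and flag retraining.
new_batch = [(10, 1), (11, 1), (5, 0), (25, 1)]
needs_retraining = accuracy(threshold, new_batch) < 0.9
print(threshold, test_acc, needs_retraining)
```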

A Closer Look At Machine Learning Algorithms

Machine learning algorithms are at the heart of many of the applications we use today, from image recognition and natural language processing to recommendation systems and fraud detection. Some of the most popular machine learning algorithms include decision trees, random forests, support vector machines, and neural networks. Decision trees are a simple and intuitive approach to classification and regression problems, while random forests combine the predictive power of multiple decision trees. Support vector machines are a powerful tool for binary classification problems, and neural networks are a versatile approach to a wide range of machine learning tasks, including image and speech recognition. Overall, there are many different machine learning algorithms to choose from, each with its own strengths and weaknesses, and selecting the right one for a given problem is an important part of success in the field of machine learning.
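Decision trees are typically grown by repeatedly choosing the split that leaves the resulting groups as pure as possible. The sketch below shows that core step for a single numeric feature with made-up values. It assumes Gini impurity as the purity measure, as in the CART algorithm.

```python
def gini(labels):
    """Gini impurity: chance that two picks (with replacement) have different labels."""
    n = len(labels)
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))

def best_split(xs, ys):
    """Find the threshold on one feature that minimizes weighted Gini impurity."""
    best = (float("inf"), None)
    values = sorted(set(xs))
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2  # candidate threshold between neighboring values
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(ys)
        best = min(best, (score, t))
    return best  # (impurity after split, threshold)

xs = [2, 3, 10, 19, 20, 21]
ys = [0, 0, 0, 1, 1, 1]
print(best_split(xs, ys))  # → (0.0, 14.5)
```

A full tree applies the same search over every feature and then recurses into each side of the chosen split.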

Random Forest

The Random Forest algorithm is an ensemble learning method that combines multiple decision trees to make more accurate predictions. The basic idea is to build a large number of decision trees, each trained on a random bootstrap sample of the training data. When choosing each split, a tree considers only a random subset of the features. The forest then combines the trees' predictions, by majority vote for classification or by averaging for regression. By introducing randomness in the way the trees are constructed, Random Forest overcomes some of the limitations of a single decision tree, such as overfitting to the training data. Overall, Random Forest is a powerful and widely used algorithm that is particularly effective for classification and regression problems with many input features.
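The bagging-and-voting idea can be sketched with depth-1 trees ("stumps"). This is a simplification. A real random forest grows deeper trees and draws a random feature subset at every split, whereas here each stump uses one randomly chosen feature. The data, tree count, and seed are illustrative.

```python
import random
from collections import Counter

def majority(labels):
    """Most common label in a list."""
    return Counter(labels).most_common(1)[0][0]

def train_stump(rows, feature):
    """Depth-1 tree on one feature: pick the threshold with the fewest training errors."""
    values = sorted({x[feature] for x, _ in rows})
    best = None
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in rows if x[feature] <= t]
        right = [y for x, y in rows if x[feature] > t]
        l_label, r_label = majority(left), majority(right)
        errors = sum(y != l_label for y in left) + sum(y != r_label for y in right)
        if best is None or errors < best[0]:
            best = (errors, t, l_label, r_label)
    if best is None:  # all sampled values identical: predict the majority label
        label = majority([y for _, y in rows])
        return lambda x: label
    _, t, l_label, r_label = best
    return lambda x: l_label if x[feature] <= t else r_label

def train_forest(rows, n_trees=25, seed=0):
    rng = random.Random(seed)
    n_features = len(rows[0][0])
    forest = []
    for _ in range(n_trees):
        sample = [rng.choice(rows) for _ in rows]  # bootstrap: sample with replacement
        feature = rng.randrange(n_features)        # random feature for this stump
        forest.append(train_stump(sample, feature))
    return forest

def predict(forest, x):
    return majority([tree(x) for tree in forest])  # majority vote across trees

rows = [((1, 1), 0), ((2, 1), 0), ((1, 2), 0), ((8, 9), 1), ((9, 8), 1), ((9, 9), 1)]
forest = train_forest(rows)
print(predict(forest, (1.5, 1.5)), predict(forest, (8.8, 9.1)))
```

Some bootstrap samples contain only one class and yield a constant stump. The vote across many trees absorbs such weak members.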

Logistic Regression

Logistic Regression is a commonly used statistical algorithm for predicting a binary dependent variable (or, in its multinomial form, a categorical one) from one or more independent variables. It passes a linear combination of the independent variables through the S-shaped logistic (sigmoid) function to estimate the probability that a data point belongs to the positive class. Its parameters are fitted by maximizing the likelihood of the training labels. New data points are then classified by thresholding this probability, typically at 0.5, which corresponds to a linear decision boundary. Logistic Regression is a simple yet powerful algorithm that is widely used in machine learning applications such as fraud detection, medical diagnosis, and credit scoring.
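Here is a minimal sketch of fitting logistic regression with one independent variable, using gradient descent on the log loss (equivalent to maximizing the likelihood). Production libraries use more sophisticated solvers and usually add regularization. The data here is hypothetical and deliberately overlapping so that the fitted weights stay finite.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(xs, ys, learning_rate=0.5, epochs=5000):
    """Fit p(y=1 | x) = sigmoid(w*x + b) by gradient descent on mean log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradient of the mean log loss: mean((p - y) * x) for w, mean(p - y) for b.
        diffs = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        w -= learning_rate * sum(d * x for d, x in zip(diffs, xs)) / n
        b -= learning_rate * sum(diffs) / n
    return w, b

# Hypothetical 1-D data with overlapping classes.
xs = [0, 1, 2, 3, 4, 5]
ys = [0, 0, 1, 0, 1, 1]
w, b = train_logistic(xs, ys)
boundary = -b / w  # where the predicted probability crosses 0.5
preds = [int(sigmoid(w * x + b) >= 0.5) for x in xs]
print(round(boundary, 2))  # → 2.5
print(preds)               # → [0, 0, 0, 1, 1, 1]
```

Because this data is symmetric around x = 2.5 (mirroring x also flips the labels), the fitted boundary lands exactly there.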

K-Nearest Neighbors

The k-nearest neighbors algorithm, or kNN for short, is a simple yet powerful algorithm used for classification and regression tasks. The idea behind kNN is to predict the class or value of a new data point by finding the k closest points in the training data. The prediction is the majority vote (for classification) or the average (for regression) of their target values. The distance metric can vary depending on the problem, but the most common is Euclidean distance. Because distances depend on the scale of each feature, features are usually standardized first. The choice of k also affects performance. Small values of k make predictions sensitive to noise, while larger values produce smoother decision boundaries but can blur the distinction between classes near their edges. The kNN algorithm is easy to understand and implement, making it a popular choice for beginners in machine learning.
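Here is a straightforward kNN classifier using Euclidean distance and majority vote. The points and labels are made up. For regression, the vote would be replaced by the average of the k neighbors' values.

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Sort training points by Euclidean distance to the query and keep the k closest.
    nearest = sorted(
        zip(train_points, train_labels),
        key=lambda pair: math.dist(pair[0], query),
    )[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

points = [(1, 1), (1, 2), (2, 1), (6, 6), (6, 7), (7, 6)]
labels = ["red", "red", "red", "blue", "blue", "blue"]
print(knn_predict(points, labels, (1.5, 1.5)))  # → red
print(knn_predict(points, labels, (6.5, 6.2)))  # → blue
```

There is no training step beyond storing the data. All the work happens at prediction time, which is why kNN can become slow on large datasets.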

Tools and Frameworks for Machine Learning

The field of machine learning has seen a proliferation of powerful and user-friendly tools and frameworks in recent years, making it easier than ever for developers and data scientists to build and deploy machine learning models. Some of the most popular machine learning tools and frameworks include TensorFlow, PyTorch, scikit-learn, Keras, and Apache Spark. These tools offer a wide range of functionality, from basic regression and classification tasks to more advanced deep learning models and neural networks. Additionally, many cloud providers offer their own machine learning platforms, such as Amazon SageMaker, Google Cloud AI Platform, and Microsoft Azure Machine Learning. With so many powerful and easy-to-use tools and frameworks available, the barrier to entry for developing and deploying machine learning models has never been lower.

Some of the most popular machine learning tools and frameworks:

- TensorFlow: Developed by Google, TensorFlow is a powerful open-source platform for building and training machine learning models. It offers a range of tools and resources for both beginners and experts in the field.

- PyTorch: Originally developed at Facebook (now Meta), PyTorch is a Python-based machine learning framework that is particularly popular for building and training deep learning models.

- scikit-learn: Scikit-learn is a comprehensive machine learning toolkit that includes a range of algorithms and tools for data preparation, feature engineering, model selection, and evaluation.

- Keras: Keras is a high-level neural network API that makes it easy for developers to build and train deep learning models. It is closely integrated with TensorFlow (as tf.keras), and Keras 3 can also run on JAX and PyTorch backends.

- Apache Spark: Apache Spark is a popular framework for distributed computing that includes tools for machine learning and data processing. It integrates with a range of other machine learning tools and frameworks, making it a popular choice for scalable machine learning applications.

These are just a few examples of the many machine learning tools and frameworks available to developers and data scientists today, each with its own strengths and weaknesses.

Each of these tools involves trade-offs. TensorFlow is one of the most widely used machine learning platforms and offers a range of tools and resources for building, training, and deploying models. It is supported by a large and active community, but it can be complex for beginners. PyTorch, on the other hand, is known for its ease of use and flexibility and is widely used in research. It supports a broad range of neural network architectures and optimization techniques, although its deployment tooling has historically been less extensive than TensorFlow's. scikit-learn is a user-friendly toolkit that is easy for beginners and offers a range of built-in algorithms and tools for classification, regression, clustering, and more. However, it has limited support for deep learning models and is designed primarily for single-machine, in-memory workloads, so it can be slower than other frameworks on very large datasets.

Keras is a high-level neural network API that makes it easy to build and train deep learning models. It can handle a range of neural network architectures and optimization techniques and integrates well with TensorFlow. However, it focuses on neural networks, and its high level of abstraction offers less fine-grained control than lower-level frameworks. Finally, Apache Spark is designed for distributed computing, making it a popular choice for large-scale batch and streaming data processing. It ships with MLlib, its built-in machine learning library, and integrates well with Hadoop and other big data technologies. It does not strictly require Hadoop and can run locally, but it delivers most of its benefit on a cluster. It also has a steep learning curve for beginners, and its overhead makes it less suitable for smaller datasets. Ultimately, the choice of tool or framework will depend on the specific needs of the project at hand.

Future of Machine Learning

Machine learning has grown rapidly in recent years, and its future looks equally exciting. Advances in algorithms and hardware are enabling machines to make predictions with ever greater accuracy and to automate a wide range of tasks that were once the exclusive domain of human intelligence. Machine learning is widely expected to keep transforming industries such as healthcare, finance, transportation, and manufacturing. As these advances continue, demand is likely to grow for skilled machine learning professionals who can design, develop, and implement machine learning solutions to complex problems.

In addition, we can expect machine learning to become accessible to a wider range of users. Automated machine learning (AutoML) platforms and other tools now automate much of the machine learning process, such as model selection and hyperparameter tuning. This makes the technology usable by developers and data scientists who may not have deep expertise in machine learning. As these tools mature, an increasing number of organizations are likely to leverage machine learning to drive innovation, boost efficiency, and create new business opportunities.

Conclusion

Machine learning has emerged as an invaluable tool for businesses looking to optimize their operations, gain insights into customer behavior, and unlock new opportunities for growth and innovation. While implementing machine learning effectively requires careful evaluation and significant expertise, making smart investments in this technology can position businesses for long-term success in a rapidly evolving digital landscape. By leveraging machine learning to improve their products and services and stay ahead of the competition, businesses can gain a competitive advantage and drive innovation across a range of industries.