GPT-3 and Language Processing

The field of natural language processing (NLP) has seen significant advancements in recent years, thanks to the development of powerful machine learning models. One such model that has generated a lot of buzz in the NLP community is GPT-3 (Generative Pre-trained Transformer 3), developed by OpenAI. GPT-3 is a language model that can generate human-like text, making it a revolutionary tool for language processing.

In this article, we will explore what GPT-3 is, how it works, and its impact on natural language processing. We will also discuss its potential for language translation, its application in chatbots and customer service, and its role in content creation and copywriting. (Spoiler Alert: 100% of this article was written by the davinci-003 model from OpenAI using a proprietary series of prompts.) Finally, we will examine the limitations and ethical concerns surrounding GPT-3 and its future in language processing.

What is GPT-3 and How Does it Work?

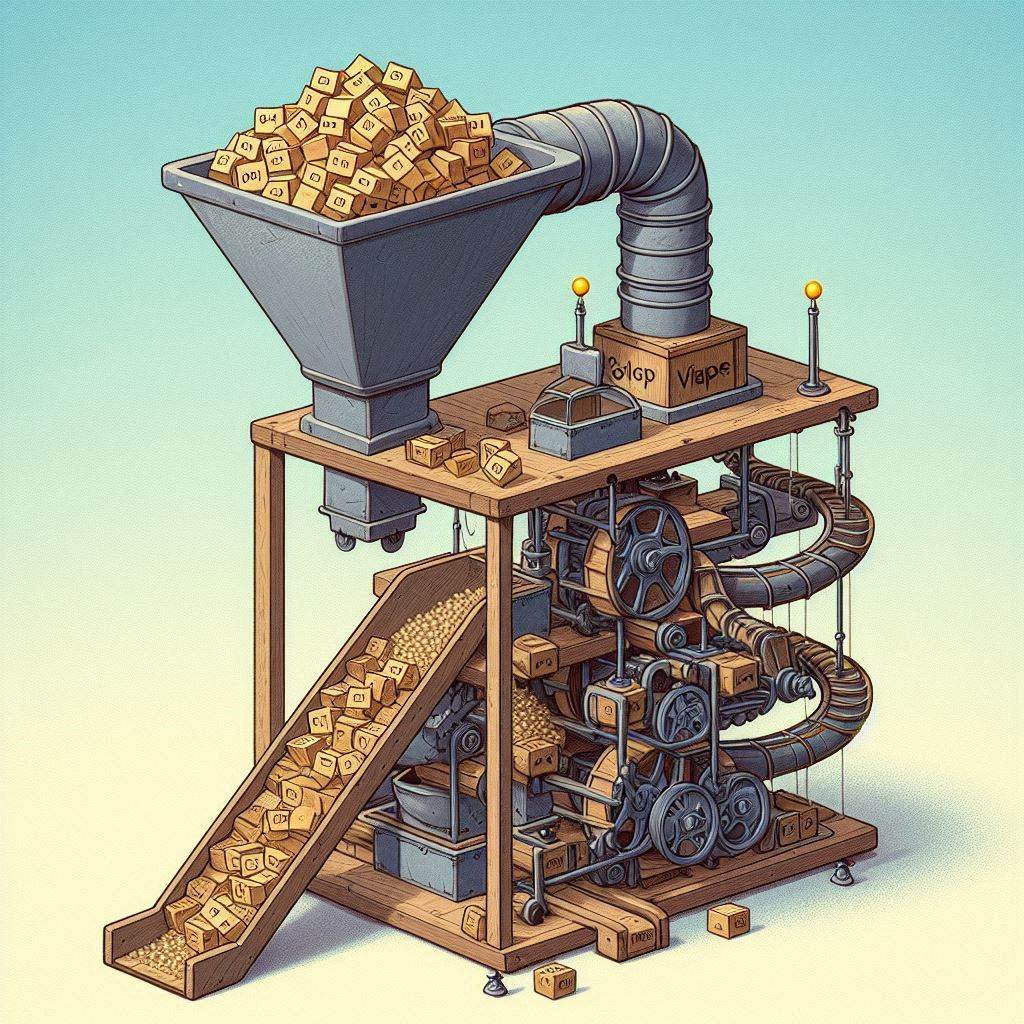

GPT-3 is a deep learning model that uses a transformer architecture to generate human-like text. It was pre-trained on a massive dataset of text, much of it from the internet, which lets it write in a wide range of styles and on a wide range of topics. Because pre-training already teaches the model the underlying patterns of language, it can often be applied to a specific task with only a few examples in the prompt, and it can be fine-tuned when a task calls for it.
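The core operation of a transformer is attention: each position in the text builds its representation by taking a weighted mix of the positions before it. The sketch below is a minimal pure-Python illustration of scaled dot-product attention with a causal mask. It is not GPT-3's implementation. The function names and the toy vectors are hypothetical, and each vector serves as its own query, key, and value, whereas a real transformer first applies learned projection matrices.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(vectors):
    """Scaled dot-product attention where position i sees only positions 0..i.

    Simplification (assumption): each vector acts as its own query, key,
    and value. A real transformer uses learned projections instead.
    """
    dim = len(vectors[0])
    outputs = []
    for i, query in enumerate(vectors):
        visible = vectors[: i + 1]  # causal mask: later positions are hidden
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in visible
        ]
        weights = softmax(scores)
        # Weighted average of the visible vectors, one dimension at a time.
        mixed = [
            sum(w * value[d] for w, value in zip(weights, visible))
            for d in range(dim)
        ]
        outputs.append(mixed)
    return outputs

# Three toy "token" vectors standing in for learned embeddings.
token_vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = causal_self_attention(token_vectors)
for position, vector in enumerate(attended):
    print(position, vector)
```

The causal mask is what makes the model suitable for generation: the output at a given position never depends on text that comes after it.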

GPT-3 works by predicting the next word in a sentence based on the previous words (more precisely, the next token, which may be a whole word or a piece of one). Its training is self-supervised, often loosely called unsupervised: the text itself supplies the correct next word, so no human-written labels are needed. This allows GPT-3 to generate text that is similar to human writing and can even mimic the style of a particular author or genre.
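To make next-word prediction concrete, here is a toy sketch that learns from raw text with no labels. It is a simple bigram counter, not a transformer, and it only looks one word back, whereas GPT-3 conditions on a long span of preceding text. The function names and the tiny corpus are hypothetical and chosen only for illustration.

```python
from collections import Counter, defaultdict

def train_bigram_model(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follow_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1
    return follow_counts

def predict_next_word(model, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

def generate(model, start_word, max_words=5):
    """Greedily extend `start_word` one predicted word at a time."""
    output = [start_word.lower()]
    for _ in range(max_words):
        next_word = predict_next_word(model, output[-1])
        if next_word is None:
            break
        output.append(next_word)
    return " ".join(output)

# The "labels" are just the words that actually come next in the text.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram_model(corpus)
print(predict_next_word(model, "the"))  # → cat
print(generate(model, "cat", max_words=3))
```

Generation is the same prediction step applied repeatedly, with each predicted word appended to the text before the next prediction is made.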

GPT-3’s Revolutionary Language Capabilities

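GPT-3’s language capabilities are a substantial step forward. It can generate text that readers often cannot tell apart from human writing: in the evaluation reported by Brown et al. (2020), people identified short model-written news articles at a rate only slightly above chance. This makes it a powerful tool for content creation, copywriting, and even journalism. GPT-3 can also understand and generate text in multiple languages, making it useful for language translation, although its training data was predominantly English.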

In addition to its language generation capabilities, GPT-3 can also perform a wide range of language tasks, including question answering, summarization, and sentiment analysis. It can even write computer code. GPT-3 itself produces only text; generating images from text descriptions is done by separate models such as OpenAI’s DALL-E.
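Tasks like these are typically posed to GPT-3 as text to be continued: an instruction, a few worked examples, and an unanswered query, which is the few-shot setup described by Brown et al. (2020). The sketch below only assembles such a prompt as a string and makes no API call. The helper name `build_few_shot_prompt`, its parameters, and the exact layout are assumptions for illustration, not a format required by OpenAI.

```python
def build_few_shot_prompt(instruction, examples, query,
                          input_label="Input", output_label="Output"):
    """Assemble a text prompt from an instruction, worked examples, and a query.

    The prompt ends with an empty output slot, so a next-word predictor
    would continue the text by filling in the answer.
    """
    lines = [instruction, ""]
    for example_input, example_output in examples:
        lines.append(f"{input_label}: {example_input}")
        lines.append(f"{output_label}: {example_output}")
        lines.append("")
    lines.append(f"{input_label}: {query}")
    lines.append(f"{output_label}:")
    return "\n".join(lines)

prompt = build_few_shot_prompt(
    instruction="Classify the sentiment of each review as positive or negative.",
    examples=[
        ("The battery lasts all day.", "positive"),
        ("The screen cracked within a week.", "negative"),
    ],
    query="Setup was quick and painless.",
    input_label="Review",
    output_label="Sentiment",
)
print(prompt)
```

The same helper could frame question answering, summarization, or translation by swapping the instruction, labels, and examples, which is why one model can cover many tasks.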

The Impact of GPT-3 on Natural Language Processing

GPT-3 has had a significant impact on the field of natural language processing. Its language generation capabilities have opened up new possibilities for content creation, copywriting, and journalism. It has also made language translation more accessible, since a single general-purpose model can translate between languages without being trained specifically for that task.

GPT-3 has also made it easier to build chatbots and customer service applications. Its ability to generate human-like responses makes it a valuable tool for creating chatbots that can interact with customers in a natural and engaging way.

GPT-3’s Ability to Understand Context and Meaning

One of the most impressive aspects of GPT-3 is its ability to understand context and meaning. It can generate text that is not only grammatically correct but also semantically meaningful. This means that it can take into account the context of a sentence and generate text that is appropriate for that context.

For example, the sentence “I went to the bank” is ambiguous on its own. If the surrounding text mentions depositing a paycheck, GPT-3 will tend to continue as though the bank is a financial institution; if it mentions fishing, it will tend to treat the bank as a riverbank.

GPT-3’s Potential for Language Translation

GPT-3’s language capabilities make it a valuable tool for language translation. It can understand and generate text in multiple languages, making it easier to translate content from one language to another. GPT-3 can also often produce wording that suits the cultural context of the target language, which can make its translations read more naturally.

GPT-3’s Application in Chatbots and Customer Service

GPT-3’s ability to generate human-like responses makes it a valuable tool for chatbots and customer service applications. It can interact with customers in a natural and engaging way, providing a more personalized experience. GPT-3 can also understand and respond to customer queries, making it a valuable tool for customer service applications.
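A chatbot built on a text-completion model usually keeps the conversation going by resending recent turns as part of each prompt, and the model can only read a limited amount of text at once. The sketch below shows one plausible way to do that, dropping the oldest turns when the prompt grows too long. The function name, the speaker labels, the opening line, and the word-based budget are all assumptions for illustration; real systems count tokens rather than words.

```python
def build_chat_prompt(history, user_message, max_words=50,
                      system_line="The following is a conversation with a helpful support assistant."):
    """Build a prompt from recent turns, dropping the oldest when over budget.

    `max_words` is a crude stand-in for a token limit (assumption):
    real models count tokens, not whitespace-separated words.
    """
    turns = history + [("Customer", user_message)]
    kept = []
    used = len(system_line.split())
    # Walk backwards so the most recent turns are kept first.
    for speaker, text in reversed(turns):
        line = f"{speaker}: {text}"
        cost = len(line.split())
        # Always keep the newest message, even if it alone exceeds the budget.
        if kept and used + cost > max_words:
            break
        kept.append(line)
        used += cost
    kept.reverse()
    # End with an open "Assistant:" slot for the model to complete.
    return "\n".join([system_line, *kept, "Assistant:"])

history = [
    ("Customer", "Hi, my order has not arrived."),
    ("Assistant", "Sorry to hear that. What is the order number?"),
]
print(build_chat_prompt(history, "It is 12345.", max_words=1000))
```

Keeping the newest turns and discarding the oldest is a simple policy; a production system might instead summarize older turns so that earlier details are not lost.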

GPT-3’s Role in Content Creation and Copywriting

GPT-3’s language generation capabilities make it a powerful tool for content creation and copywriting. It can generate text that is often hard to distinguish from human writing, making it a valuable tool for drafting blog posts, articles, and even books. GPT-3 can also generate text in a wide range of styles and genres, making it a versatile tool for content creation.

GPT-3’s Limitations and Ethical Concerns

While GPT-3 has impressive language capabilities, it also has limitations and raises ethical concerns. A key limitation is that it can produce fluent text that is factually wrong, because it predicts likely wording rather than checking facts. One of the biggest ethical concerns is the potential for GPT-3 to be used for malicious purposes, such as generating fake news or impersonating individuals online. There are also concerns about bias in the data used to train GPT-3, which the model can reproduce in the text it generates.

The Future of Language Processing with GPT-3

The future of language processing with GPT-3 is exciting. As the model continues to be fine-tuned and improved, it will become an even more powerful tool for language processing. GPT-3’s language generation capabilities will continue to revolutionize content creation, copywriting, and journalism, while its ability to understand context and meaning will make it a valuable tool for language translation and customer service applications.

Conclusion: GPT-3’s Potential for Language Revolution

GPT-3 is a revolutionary tool for language processing. Its language generation capabilities have opened up new possibilities for content creation, copywriting, and journalism, while its ability to use context and meaning makes it a valuable tool for language translation and customer service applications. There are limitations and ethical concerns surrounding GPT-3, but its potential to transform how we work with language is hard to dismiss.

References and Further Reading

- Brown, T. B., Mann, B., Ryder, N., Subbiah, M., Kaplan, J., Dhariwal, P., … & Amodei, D. (2020). Language models are few-shot learners. arXiv preprint arXiv:2005.14165.

- Radford, A., Wu, J., Child, R., Luan, D., Amodei, D., & Sutskever, I. (2019). Language models are unsupervised multitask learners. OpenAI Blog, 1(8), 9.

- OpenAI. (n.d.). GPT-3. Retrieved from https://openai.com/blog/gpt-3-applications/.
