To deepen your understanding of subword tokenization and its applications in Natural Language Processing (NLP), here are several recommended resources and tutorials:

- GeeksforGeeks – Subword Tokenization in NLP: This article provides a comprehensive overview of subword tokenization, explaining why it’s beneficial for handling large vocabularies and complex word structures. It includes practical examples and discusses Byte-Pair Encoding (BPE), a popular subword tokenization approach Source 0.

- TensorFlow Text Guide – Subword Tokenizers: This guide demonstrates how to generate a subword vocabulary from a dataset and use it to build a `text.BertTokenizer` from the vocabulary. It highlights the advantages of subword tokenization, such as its ability to interpolate between word-based and character-based tokenization Source 1.

- Octanove Blog – Complete Guide to Subword Tokenization Methods in the Neural Era: Authored by Masato Hagiwara, this blog post covers several subword tokenization techniques, including WordPiece, Byte-Pair Encoding (BPE), and SentencePiece. It explains the differences between these methods and their implications for designing NLP models Source 2.

- Hugging Face NLP Course: This course chapter on subword tokenization explains the principle behind subword tokenization and provides examples of how it works. It also introduces various subword tokenization techniques used in different models, such as Byte-level BPE in GPT-2 and WordPiece in BERT Source 3.

- Neptune.ai Blog – Tokenization in NLP: This article discusses the importance of tokenization in NLP projects and explores different types of tokenization, including subword tokenization. It also mentions various tools and libraries available for tokenization, such as NLTK, TextBlob, spaCy, Gensim, and Keras Source 4.

By exploring these resources, you’ll gain a solid understanding of subword tokenization, its significance in NLP, and how to implement it effectively in your projects.
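Several of these resources describe Byte-Pair Encoding as a loop that repeatedly merges the most frequent adjacent pair of symbols. The sketch below illustrates that merge-learning loop in plain Python. The word counts are a made-up toy corpus, the `</w>` end-of-word marker follows a common BPE convention, and the function names are hypothetical. Production libraries such as Hugging Face `tokenizers` or SentencePiece add pre-tokenization, byte-level alphabets, and efficient pair bookkeeping that are omitted here.

```python
from collections import Counter


def get_pair_counts(vocab):
    """Count adjacent symbol pairs, weighted by how often each word occurs."""
    pairs = Counter()
    for symbols, freq in vocab.items():
        for left, right in zip(symbols, symbols[1:]):
            pairs[(left, right)] += freq
    return pairs


def merge_pair(pair, vocab):
    """Rewrite every word so each occurrence of `pair` becomes one merged symbol."""
    merged = {}
    for symbols, freq in vocab.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged


def learn_bpe(word_counts, num_merges):
    """Learn up to `num_merges` merge rules from a {word: frequency} table."""
    # Start from single characters; "</w>" marks the end of each word so that
    # word-final pieces (e.g. "est</w>") stay distinct from word-internal ones.
    vocab = {tuple(word) + ("</w>",): freq for word, freq in word_counts.items()}
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(vocab)
        if not pairs:
            break
        # Most frequent pair; on ties, max() keeps the pair counted first.
        best = max(pairs, key=pairs.get)
        merges.append(best)
        vocab = merge_pair(best, vocab)
    return merges, vocab


merges, vocab = learn_bpe({"low": 5, "lower": 2, "newest": 6, "widest": 3}, num_merges=5)
print(merges)
# [('e', 's'), ('es', 't'), ('est', '</w>'), ('l', 'o'), ('lo', 'w')]
```

Applying these merges in order to an unseen word such as "lowest" yields the pieces "low" and "est</w>", which is how BPE covers words it never saw as a whole during training.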

Further reading

- https://www.geeksforgeeks.org/subword-tokenization-in-nlp/

- https://www.tensorflow.org/text/guide/subwords_tokenizer

- https://blog.octanove.org/guide-to-subword-tokenization/

- https://huggingface.co/learn/nlp-course/en/chapter2/4?fw=pt

- https://neptune.ai/blog/tokenization-in-nlp

- https://towardsdatascience.com/word-subword-and-character-based-tokenization-know-the-difference-ea0976b64e17

- https://wandb.ai/mostafaibrahim17/ml-articles/reports/An-introduction-to-tokenization-in-natural-language-processing--Vmlldzo3NTM4MzE5

- https://towardsdatascience.com/a-comprehensive-guide-to-subword-tokenisers-4bbd3bad9a7c

- https://www.geeksforgeeks.org/nlp-how-tokenizing-text-sentence-words-works/

Real-world Application of Subword Tokenization in Natural Language Processing

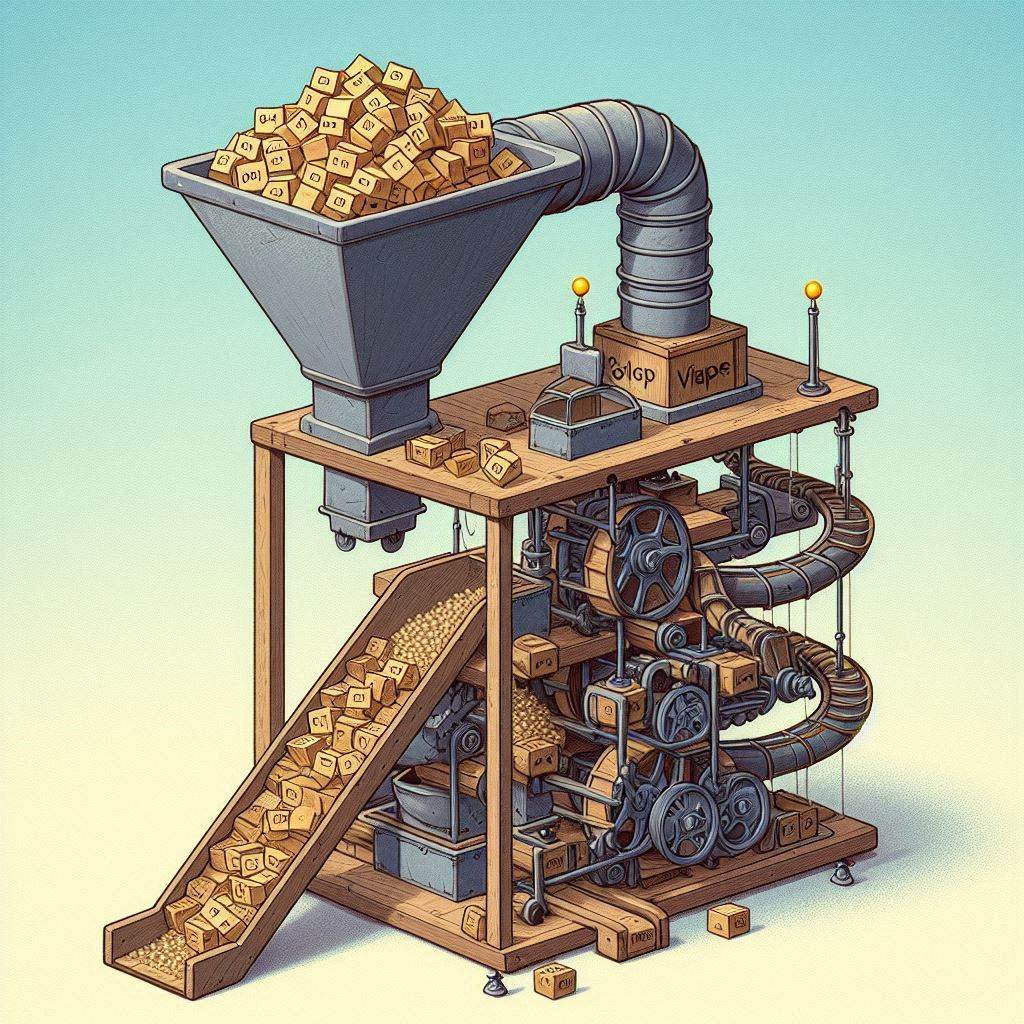

A real-world application of tokenization in Natural Language Processing (NLP) can be seen in the rating classifier project described in Source 3. This project classified user reviews by their ratings using a deep-learning text classification model. Tokenization played a crucial role in several key steps of the process:

- Text Cleaning and Tokenization: The review text was first split into word tokens with the `word_tokenize` function from the Natural Language Toolkit (NLTK), and irrelevant tokens such as stop words and punctuation were then removed, preparing the text for further processing.

- Preprocessing with Keras Tokenizer: The cleaned text was then processed with the `Tokenizer` class from Keras, which built a vocabulary from the tokens and converted each text into a sequence of integers. This conversion is essential because a neural network takes numeric input rather than raw strings.

- Sequence Padding: Shorter sequences were padded so that all sequences had the same length, which is necessary for batch processing during model training.

- Model Training and Evaluation: The preprocessed and padded sequences were then used to train a Bidirectional Long Short-Term Memory (BiLSTM) model. The model was trained on a subset of the data and evaluated on a separate testing set to assess its performance.
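The cleaning, integer-encoding, and padding steps above can be sketched without any libraries. The functions below are hypothetical stand-ins that broadly mirror the roles of NLTK's `word_tokenize`, the Keras `Tokenizer` (which numbers words by descending frequency starting at 1), and Keras's `pad_sequences` (which left-pads by default). They are not those APIs: the stop-word list is deliberately tiny, ties are broken alphabetically by assumption, and the two reviews are made up. The BiLSTM itself is not shown.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stop-word list; NLTK ships a much larger one.
STOP_WORDS = {"the", "a", "an", "is", "was", "and", "it", "this", "of", "to"}


def clean_and_tokenize(text):
    """Lowercase, split into word tokens, and drop stop words and punctuation."""
    # Punctuation never matches the pattern, so it is dropped here.
    tokens = re.findall(r"[a-z0-9]+(?:'[a-z]+)?", text.lower())
    return [t for t in tokens if t not in STOP_WORDS]


def build_word_index(token_lists):
    """Map each word to an id by descending frequency; id 0 is reserved for padding."""
    counts = Counter(t for tokens in token_lists for t in tokens)
    ranked = sorted(counts, key=lambda w: (-counts[w], w))  # ties broken alphabetically
    return {word: i + 1 for i, word in enumerate(ranked)}


def to_sequence(tokens, word_index):
    """Convert tokens to integer ids, skipping words missing from the index."""
    return [word_index[t] for t in tokens if t in word_index]


def pad(sequences, maxlen, value=0):
    """Left-pad (and left-truncate) every sequence to exactly `maxlen` ids (maxlen > 0)."""
    padded = []
    for seq in sequences:
        seq = seq[-maxlen:]  # keep the last `maxlen` ids
        padded.append([value] * (maxlen - len(seq)) + seq)
    return padded


reviews = ["Great product, works well!", "The battery died. Terrible product."]
token_lists = [clean_and_tokenize(r) for r in reviews]
word_index = build_word_index(token_lists)
sequences = [to_sequence(t, word_index) for t in token_lists]
print(pad(sequences, maxlen=5))  # [[0, 4, 1, 7, 6], [0, 2, 3, 5, 1]]
```

The padded id matrix is what a model such as the BiLSTM in this project would consume, typically through an embedding layer that maps each id to a vector.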

In this project, tokenization transformed raw review text into a structured format suitable for deep learning models. By converting text into sequences of integers, it allowed the model to learn patterns and relationships within the data and to classify user reviews by rating.

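This example illustrates the practical role of tokenization in NLP projects, highlighting its importance in preprocessing text data for machine learning models. Because `word_tokenize` and the Keras `Tokenizer` work at the word level, a subword tokenizer such as WordPiece or BPE would take their place at the same step of the pipeline.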
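To illustrate subword tokenization at the tokenization step of a pipeline like this one, the code below implements WordPiece-style greedy longest-match-first segmentation, the scheme used by BERT's tokenizer, with `##` marking word-internal pieces. Unseen word forms such as "reviewers" are broken into known pieces instead of collapsing to a single out-of-vocabulary id. The nine-entry vocabulary and the function names are hypothetical; real WordPiece vocabularies are learned from a corpus and hold tens of thousands of entries.

```python
VOCAB = ["[PAD]", "[UNK]", "review", "##er", "##s", "play", "##ed", "##ing", "great"]


def wordpiece_tokenize(word, vocab, unk="[UNK]"):
    """Greedy longest-match-first split of one word into vocabulary pieces.

    Pieces after the first carry the "##" continuation prefix. If any part of
    the word cannot be matched, the whole word maps to `unk`.
    """
    pieces, start = [], 0
    while start < len(word):
        end, match = len(word), None
        while end > start:  # try the longest remaining substring first
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return [unk]
        pieces.append(match)
        start = end
    return pieces


def encode(text, vocab_list):
    """Lowercase, split on whitespace, segment each word, and map pieces to ids."""
    token_to_id = {token: i for i, token in enumerate(vocab_list)}
    ids = []
    for word in text.lower().split():
        ids.extend(token_to_id[piece] for piece in wordpiece_tokenize(word, token_to_id))
    return ids


print(wordpiece_tokenize("reviewers", set(VOCAB)))  # ['review', '##er', '##s']
print(encode("Great reviewers played xyz", VOCAB))  # [8, 2, 3, 4, 5, 6, 1]
```

Only a word with no matching pieces at all ("xyz" here) falls back to `[UNK]`; the resulting id lists can be padded exactly like word-level sequences.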

Further reading

- https://www.geeksforgeeks.org/subword-tokenization-in-nlp/

- https://neptune.ai/blog/tokenization-in-nlp

- https://towardsdatascience.com/wordpiece-subword-based-tokenization-algorithm-1fbd14394ed7

- https://www.datacamp.com/blog/what-is-tokenization

- https://medium.com/@lars.chr.wiik/how-modern-tokenization-works-d56013a78f1e

- https://www.analyticsvidhya.com/blog/2020/05/what-is-tokenization-nlp/

- https://wandb.ai/mostafaibrahim17/ml-articles/reports/An-introduction-to-tokenization-in-natural-language-processing--Vmlldzo3NTM4MzE5

- https://www.tensorflow.org/text/guide/subwords_tokenizer

- https://medium.com/@akp83540/word-piece-tokenization-algorithm-dd86de322b00

Tokenization Helps Models Identify Structure

The choice of tokenizer significantly impacts the overall performance of a deep learning model in text classification tasks. Here’s how:

- Efficiency in Handling Variations: Tokenization helps models identify the underlying structure of text, enabling them to process it more efficiently. Depending on the task and the language being processed, some tokenization methods handle linguistic variation, including words with multiple meanings or forms, better than others [2].

- Compression Ability and Model Performance: Research indicates that a tokenizer's compression ability, which depends on the amount of supporting data it was trained on, affects model performance. Tokenizers trained on more data tend to produce shorter tokenized texts, which generally leads to better performance in downstream tasks. This effect is more pronounced for less frequent words, and in generative tasks than in classification tasks [4].

- Impact on Smaller Models: Smaller models are particularly sensitive to the quality of tokenization. Poor tokenization can lead to significant drops in performance for models with fewer parameters. This suggests that the choice of tokenizer is crucial for optimizing the performance of smaller models [4].

- Correlation Between Tokenizer Support and Model Success: There is a strong correlation between the level of support a tokenizer receives (in terms of training data) and the model’s success in downstream tasks. Better-supported tokenizers tend to result in improved model performance, although this relationship is not strictly linear. The impact of tokenizer support is more evident in generation tasks than in classification tasks [4].

- Consistency Across Languages: The reported effects of tokenizer choice and support on model performance hold across the languages studied, not just English. This underscores the importance of selecting an appropriate tokenizer for NLP tasks beyond English [4].
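One simple way to make "compression ability" concrete is to count how many characters each token covers on the same text: a tokenizer whose vocabulary covers more of the text needs fewer tokens. The sketch below uses greedy longest-match segmentation with a single-character fallback and two hypothetical vocabularies. Characters per token is an illustrative proxy chosen here, not necessarily the metric used in the study cited as [4].

```python
def greedy_segment(word, vocab):
    """Greedy longest-match segmentation; a single character is always accepted."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        # Shrink the candidate until it is in the vocabulary or one character long.
        while end > start + 1 and word[start:end] not in vocab:
            end -= 1
        pieces.append(word[start:end])
        start = end
    return pieces


def chars_per_token(text, vocab):
    """Non-space characters divided by token count; higher means stronger compression."""
    words = text.split()
    n_tokens = sum(len(greedy_segment(w, vocab)) for w in words)
    n_chars = sum(len(w) for w in words)
    return n_chars / n_tokens


text = "tokenization helps tokenizers"
small_vocab = {"to", "ken"}                              # hypothetical, poorly supported
large_vocab = {"token", "ization", "helps", "izers"}     # hypothetical, better supported

print(round(chars_per_token(text, small_vocab), 2))  # 1.29 (27 chars / 21 tokens)
print(round(chars_per_token(text, large_vocab), 2))  # 5.4  (27 chars / 5 tokens)
```

The better-covered vocabulary produces a tokenized text about four times shorter, which is the kind of difference the findings above associate with downstream performance.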

In summary, the choice of tokenizer plays a critical role in the performance of deep learning models in text classification tasks. Factors such as the tokenizer’s compression ability, the amount of supporting data it was trained on, and its suitability for the specific task and language all contribute to the model’s efficiency and accuracy.

Further reading

- https://trec.nist.gov/pubs/trec29/papers/UAmsterdam.DL.pdf

- https://medium.com/@lokaregns/preparing-text-data-for-transformers-tokenization-mapping-and-padding-9fbfbce28028

- https://medium.com/@mcraddock/introduction-to-tokenizers-in-large-language-models-llms-using-wardley-maps-652ee4dd6227

- https://arxiv.org/html/2403.06265v1

- https://arxiv.org/pdf/2403.00417

- https://www.tandfonline.com/doi/full/10.1080/08839514.2023.2175112

- https://neptune.ai/blog/tokenization-in-nlp

- https://arxiv.org/pdf/2402.01035

- https://deepgram.com/ai-glossary/tokenization

- https://link.springer.com/article/10.1007/s11063-022-10990-8

- https://saschametzger.com/blog/what-are-tokens-vectors-and-embeddings-how-do-you-create-them

- https://huggingface.co/docs/transformers/v4.19.3/en/tasks/sequence_classification

- https://huggingface.co/learn/nlp-course/chapter2/4

- https://towardsdatascience.com/tokenization-for-natural-language-processing-a179a891bad4

- https://analyticsindiamag.com/tutorial-on-keras-tokenizer-for-text-classification-in-nlp/

Tokenization, Stemming, and Lemmatization Are Fundamental Preprocessing Steps

Tokenization, stemming, and lemmatization are fundamental preprocessing steps in Natural Language Processing (NLP) that interact in various ways to prepare text data for analysis and modeling. Understanding how these steps work together is crucial for effective text classification models.

Tokenization

Tokenization is the initial step where text is split into individual words or tokens. This process is essential because it transforms unstructured text into a structured format that can be analyzed computationally. Tokenization can be simple, splitting text into words, or more complex, breaking down words into subwords or characters, depending on the needs of the subsequent analysis or modeling steps.
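To make the word-versus-character distinction concrete, the sketch below splits the same sentence two ways using only the standard library. The regular expression is a simplified, hypothetical word tokenizer that treats each punctuation mark as its own token, so the contraction "aren't" becomes three tokens; NLTK's `word_tokenize` follows different (Penn Treebank-style) rules. Subword tokenizers sit between these two extremes.

```python
import re


def simple_word_tokenize(text):
    """Word-level split: runs of word characters, plus each punctuation mark on its own."""
    return re.findall(r"\w+|[^\w\s]", text)


def char_tokenize(text):
    """Character-level split: every non-whitespace character is a token."""
    return [ch for ch in text if not ch.isspace()]


sentence = "Tokenizers aren't magic."
print(simple_word_tokenize(sentence))  # ['Tokenizers', 'aren', "'", 't', 'magic', '.']
print(len(char_tokenize(sentence)))    # 22
```

Even word-level tokenization involves design decisions, such as how to split contractions, and character-level output is roughly four times longer here.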

Stemming vs. Lemmatization

Both stemming and lemmatization are techniques used to reduce words to their root or base form, but they differ in their approaches and outcomes:

- Stemming is a mechanical process that chops off the ends of words to produce a “stem” or root form. It operates on a single word without considering its context or whether the resulting stem actually exists as a valid word in the language. This method is fast but can sometimes produce incorrect stems that do not correspond to actual words.

- Lemmatization, on the other hand, aims to reduce words to their base or dictionary form, known as the lemma. Unlike stemming, lemmatization takes into account the morphological analysis of words and uses linguistic rules and a lexicon (like WordNet) to determine the correct base form. This makes lemmatization more accurate but also slower than stemming.
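The contrast between the two approaches can be sketched with toy versions of each. The suffix rules and the lemma dictionary below are hypothetical and far smaller than a real stemmer (such as NLTK's `PorterStemmer`) or a WordNet-backed lemmatizer (such as NLTK's `WordNetLemmatizer`). They only show that rule-based stripping can produce non-words like "studi" and "runn", while a lexicon lookup returns dictionary forms, including irregular ones like "ran" to "run".

```python
# Hypothetical suffix rules, tried in order; real stemmers use many more conditions.
SUFFIX_RULES = [("ies", "i"), ("ing", ""), ("ed", ""), ("es", ""), ("s", "")]

# Hypothetical lemma dictionary standing in for a lexicon such as WordNet.
LEMMA_LEXICON = {"studies": "study", "studying": "study", "running": "run", "ran": "run"}


def toy_stem(word, min_stem=3):
    """Strip the first matching suffix, as long as at least `min_stem` letters remain."""
    for suffix, replacement in SUFFIX_RULES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem:
            return word[: -len(suffix)] + replacement
    return word


def toy_lemmatize(word):
    """Look the word up in the lexicon; unknown words are returned unchanged."""
    return LEMMA_LEXICON.get(word, word)


for w in ["studies", "studying", "running", "ran"]:
    print(w, toy_stem(w), toy_lemmatize(w))
# studies studi study
# studying study study
# running runn run
# ran ran run
```

The stemmer needs no lexicon and runs in constant time per rule, which is why stemming is fast; the lemmatizer is only as good as its dictionary, which is why it is more accurate but costlier.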

Interaction with Tokenization

After tokenization, stemming or lemmatization can be applied to each token to normalize the text. Normalizing text is important because it helps to reduce the variability in word forms, which can improve the performance of text classification models. For example, different forms of the same verb (e.g., “run”, “runs”, “running”) can be reduced to a common base form (“run”), allowing the model to treat them as the same word during analysis.
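The effect of this normalization on the features a classifier sees can be shown by counting tokens before and after mapping each form to its base form. The two-entry mapping below is hypothetical and covers only the verb forms from the example above.

```python
from collections import Counter

# Hypothetical mapping from inflected forms to a base form.
BASE_FORMS = {"runs": "run", "running": "run"}

tokens = "i run daily she runs too and they were running".split()

raw_counts = Counter(tokens)
normalized_counts = Counter(BASE_FORMS.get(t, t) for t in tokens)

print(raw_counts["run"], normalized_counts["run"])  # 1 3
print(len(raw_counts), len(normalized_counts))      # 10 8
```

After normalization, the three forms share one feature with a count of 3, and the vocabulary shrinks from 10 distinct tokens to 8.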

Impact on Text Classification Models

The choice between stemming and lemmatization, and the order in which these preprocessing steps are applied relative to tokenization, can affect the performance of text classification models. Models that rely heavily on semantic meaning might benefit more from lemmatization, as it normalizes words to their correct base forms. Conversely, when coarser normalization is acceptable, stemming may be sufficient, given its simplicity and speed.

In practice, the effectiveness of these preprocessing steps can depend on the specific characteristics of the text data and the goals of the text classification task. Experimentation and evaluation are often required to determine the optimal combination of tokenization, stemming, and lemmatization for a given project.
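A lightweight way to start that experimentation is to compare preprocessing variants on held-out text before training anything. The sketch below uses the out-of-vocabulary (OOV) rate, the share of test tokens never seen in training, as a cheap proxy. The two variants and the sentences are hypothetical, and a real comparison would ultimately measure classification accuracy on a validation set.

```python
def oov_rate(train_tokens, test_tokens, normalize):
    """Share of test tokens whose normalized form never appears in the training data."""
    vocab = {normalize(t) for t in train_tokens}
    test = [normalize(t) for t in test_tokens]
    return sum(t not in vocab for t in test) / len(test)


def identity(token):
    """Baseline variant: no normalization."""
    return token


def lower_and_strip_plural(token):
    """Hypothetical crude normalizer: lowercase, then drop a final 's' from longer words."""
    token = token.lower()
    return token[:-1] if token.endswith("s") and len(token) > 3 else token


train = "Dogs bark at cats".split()
test = "the dog barks at a cat".split()

for name, fn in [("raw", identity), ("normalized", lower_and_strip_plural)]:
    print(name, round(oov_rate(train, test, fn), 3))
# raw 0.833
# normalized 0.333
```

A lower OOV rate suggests the normalized features generalize better to unseen text, but it can also hide distinctions the classifier needs, which is why the final decision should rest on task metrics.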

In summary, tokenization sets the stage for further preprocessing through stemming or lemmatization, which are crucial for normalizing text and reducing its complexity to enhance the performance of text classification models. The choice of preprocessing steps and their sequence can significantly impact the outcome of NLP projects.

Further reading

- https://keremkargin.medium.com/nlp-tokenization-stemming-lemmatization-and-part-of-speech-tagging-9088ac068768

- https://www.geeksforgeeks.org/introduction-to-nltk-tokenization-stemming-lemmatization-pos-tagging/

- https://medium.com/@jeevanchavan143/nlp-tokenization-stemming-lemmatization-bag-of-words-tf-idf-pos-7650f83c60be

- https://www.nlplanet.org/course-practical-nlp/01-intro-to-nlp/05-tokenization-stemming-lemmatization

- https://towardsdatascience.com/text-preprocessing-with-nltk-9de5de891658

- https://www.educative.io/answers/difference-between-tokenization-and-lemmatization-in-nlp

- https://www.tutorialspoint.com/what-is-tokenization-and-lemmatization-in-nlp

- https://www.spydra.app/blog/tokenization-in-nlp-natural-language-processing-an-essential-preprocessing-technique

- https://towardsdatascience.com/tokenization-for-natural-language-processing-a179a891bad4

- https://www.datacamp.com/tutorial/text-classification-python