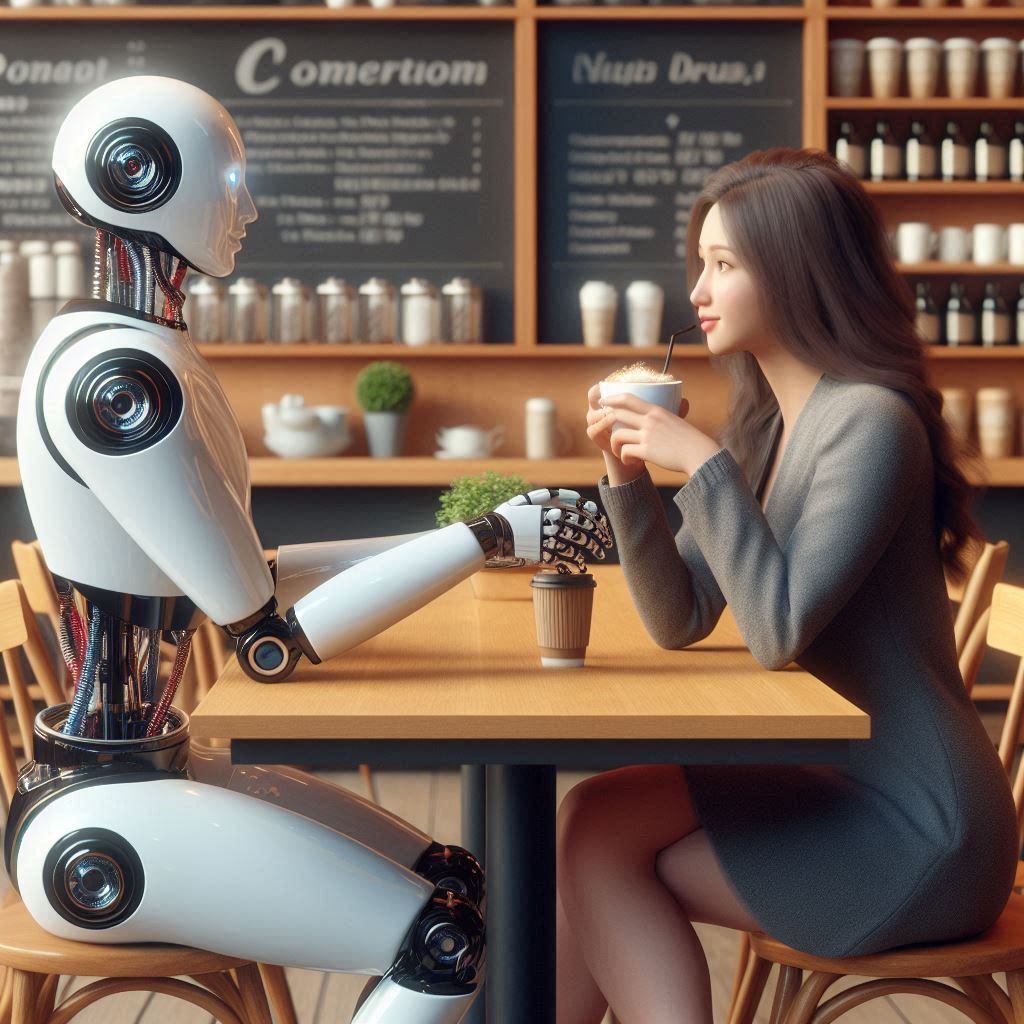

What Effect Does Telling an LLM to Take on a Particular Role Have on the Response?

Telling a large language model (LLM) to adopt a particular role or area of expertise significantly influences the nature and quality of its responses, for example their tone, vocabulary, and level of detail. This approach is part of a broader practice known as “prompt engineering,” which involves structuring the LLM's input so as to guide its output towards…
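As a rough illustration of how a role is usually supplied, here is a sketch that builds input in the chat format many LLM APIs accept: a list of messages, each a dict with `role` and `content` keys, where a `system` message carries the persona. The helper names `build_role_prompt` and `build_plain_prompt`, along with the example role and question, are hypothetical. No model is called; the sketch only shows how the input differs with and without a role.

```python
from typing import Dict, List

# Assumption: messages follow the common chat convention of
# {"role": ..., "content": ...} dicts. Helper names are hypothetical.
Message = Dict[str, str]


def build_role_prompt(role: str, question: str) -> List[Message]:
    """Prepend a role instruction as a system message."""
    return [
        # The system message tells the model which persona to adopt.
        {"role": "system", "content": f"You are {role}."},
        # The user message carries the actual question, unchanged.
        {"role": "user", "content": question},
    ]


def build_plain_prompt(question: str) -> List[Message]:
    """Baseline with no role, for side-by-side comparison."""
    return [{"role": "user", "content": question}]


if __name__ == "__main__":
    question = "Why does my bread dough not rise?"
    # Print both variants so the only difference (the system message) is visible.
    for messages in (
        build_plain_prompt(question),
        build_role_prompt("an experienced professional baker", question),
    ):
        print(messages)
```

Sending each list to the same chat model and comparing the two answers is a simple way to observe the effect of the role; which differences appear will depend on the model.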