Advanced Techniques for Image Generation with Microsoft Copilot Designer


Understanding AI Image Prompts

At the heart of generating images with Microsoft Copilot Designer lies the concept of AI image prompts. These are textual instructions you provide to the AI, guiding it to create specific images. A well-crafted prompt can range from a simple phrase to a detailed paragraph, with at least six words recommended…

Telling a Large Language Model (LLM) to take on a particular role or expertise significantly influences the nature and quality of the responses generated by the model. This approach is part of a broader strategy known as “prompt engineering,” which involves structuring the input to the LLM in a way that guides its output towards…
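As a rough illustration of role assignment, the sketch below builds a chat-style message list in which a system message sets the role and a user message carries the task. The `system`/`user` message layout is a convention shared by many chat APIs and is assumed here; `build_role_prompt` and the example role are hypothetical names, not part of any particular SDK.

```python
def build_role_prompt(role: str, task: str) -> list[dict[str, str]]:
    """Return a chat-style message list that assigns the model a role.

    Assumption: the target API accepts a list of {"role", "content"} messages.
    """
    return [
        # The system message sets the persona or expertise the model should adopt.
        {"role": "system", "content": f"You are {role}."},
        # The user message carries the actual request.
        {"role": "user", "content": task},
    ]


messages = build_role_prompt(
    "an experienced technical editor",
    "Tighten this paragraph without changing its meaning.",
)
```

The resulting list would be passed to whichever chat client you use; only the role string needs to change to try a different persona.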

Most of the mentioned LLMs, including Falcon, GPT-4, Llama 2, Cohere, and Claude 3, can be integrated into existing systems, albeit with varying degrees of ease and resource requirements. Falcon stands out for its versatility and accessibility, even on consumer hardware, making it a strong candidate for projects with limited resources. GPT-4 is offered as a hosted service rather than as downloadable weights, so integrating it is mainly a matter of API access and usage costs rather than local computational resources. Llama 2’s efficiency and customization options make it appealing for projects needing a balance between cost and performance.

In the rapidly evolving landscape of technology, artificial intelligence (AI) has emerged as a transformative force across various sectors, including healthcare, finance, and entertainment. Among the myriad applications of AI, one area stands out for its potential to redefine creativity: the generation of artistic and narrative content. Central to this creative revolution are AI design prompts, which serve as the bridge between human intent and AI capability, guiding these systems to create works that are both innovative and aesthetically pleasing.

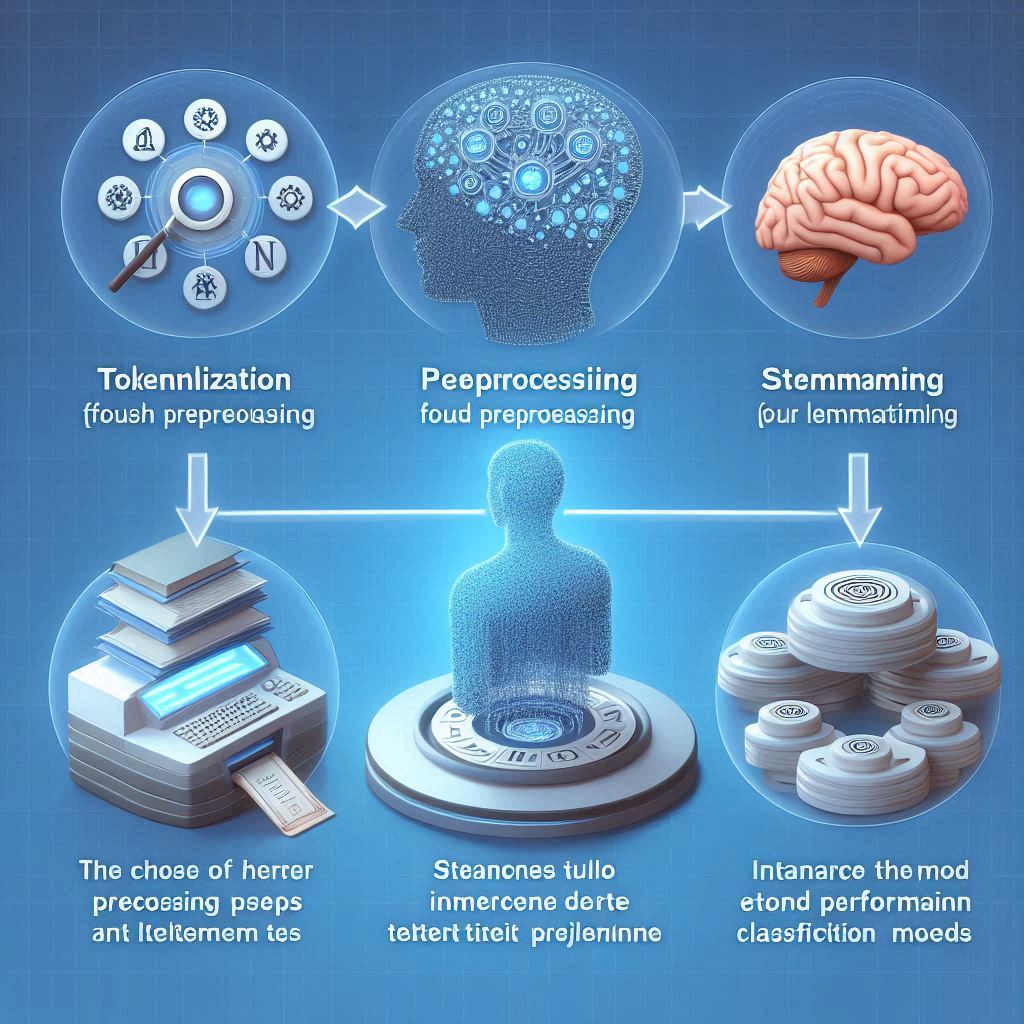

To deepen your understanding of subword tokenization and its applications in Natural Language Processing (NLP), here are several recommended resources and tutorials: By exploring these resources, you’ll gain a solid understanding of subword tokenization, its significance in NLP, and how to implement it effectively in your projects.

Further reading …
[1] https://www.geeksforgeeks.org/subword-tokenization-in-nlp/
[2] https://www.tensorflow.org/text/guide/subwords_tokenizer
[3] https://blog.octanove.org/guide-to-subword-tokenization/
[4] https://huggingface.co/learn/nlp-course/en/chapter2/4?fw=pt
[5]…

These milestones reflect the continuous evolution of Artificial Intelligence, from theoretical concepts to practical applications that significantly impact various aspects of society and the economy.

Find out how you can effectively integrate JSON Schema validation into your content generation tool, enhancing data integrity and consistency across your application.
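To give a feel for what schema validation of generated content involves, here is a minimal sketch that checks only a small subset of JSON Schema (`type`, `required`, and `properties`) using the standard library. The `validate` helper, `post_schema`, and the sample payload are all hypothetical; a real project would more likely rely on a full validator such as the third-party `jsonschema` package.

```python
import json

# Maps JSON Schema type names to Python types (subset only).
TYPE_MAP = {
    "string": str,
    "number": (int, float),
    "integer": int,
    "boolean": bool,
    "object": dict,
    "array": list,
}


def validate(instance, schema: dict) -> list[str]:
    """Return a list of error messages; an empty list means the data passed."""
    errors = []
    expected = schema.get("type")
    if expected is not None:
        # bool is a subclass of int in Python, so reject it for numeric types.
        bool_as_number = isinstance(instance, bool) and expected in ("number", "integer")
        if not isinstance(instance, TYPE_MAP[expected]) or bool_as_number:
            return [f"expected {expected}, got {type(instance).__name__}"]
    if isinstance(instance, dict):
        for key in schema.get("required", []):
            if key not in instance:
                errors.append(f"missing required property '{key}'")
        for key, subschema in schema.get("properties", {}).items():
            if key in instance:
                # Recurse into nested values and prefix errors with the property name.
                errors.extend(f"{key}: {e}" for e in validate(instance[key], subschema))
    return errors


# Hypothetical schema for a generated post.
post_schema = {
    "type": "object",
    "required": ["title", "body"],
    "properties": {
        "title": {"type": "string"},
        "body": {"type": "string"},
        "word_count": {"type": "integer"},
    },
}

generated = json.loads('{"title": "Hello", "word_count": "12"}')
problems = validate(generated, post_schema)
```

Here `problems` reports the missing `body` field and the string-valued `word_count`, which is the kind of inconsistency validation is meant to catch before content is stored or published.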

Extracting keywords from text is essential for understanding the main topics discussed in an article. This technique helps in identifying key terms that define the subject matter, which can be used for SEO, content tagging, and summarization purposes. Keyword extraction is a core component of many NLP applications, including information retrieval and content recommendation systems.
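One of the simplest ways to approximate keyword extraction is to count word frequencies after dropping common stopwords. The sketch below does exactly that with the standard library; the stopword list is a small illustrative set and `extract_keywords` is a hypothetical helper, so treat it as a baseline rather than a production method (approaches such as TF-IDF usually do better).

```python
import re
from collections import Counter

# A deliberately small, illustrative stopword list (an assumption, not a standard set).
STOPWORDS = {
    "the", "a", "an", "and", "or", "of", "to", "in", "is", "are",
    "for", "that", "this", "it", "on", "with", "as", "be", "can", "by",
}


def extract_keywords(text: str, top_n: int = 5) -> list[str]:
    """Return the top_n most frequent non-stopword terms in the text."""
    words = re.findall(r"[a-z]+", text.lower())
    counts = Counter(w for w in words if w not in STOPWORDS and len(w) > 2)
    return [word for word, _ in counts.most_common(top_n)]


sample = (
    "Keyword extraction finds key terms. "
    "Keyword extraction supports tagging and search."
)
keywords = extract_keywords(sample, top_n=2)
```

For the sample text, the two most frequent terms are "keyword" and "extraction", which could then be used as tags or search terms.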

There are several easy-to-use, free APIs for generating images using AI that you could integrate into a WordPress plugin. Two notable options are the AI Image Generator plugin for WordPress and Imajinn AI.

Crafting effective prompts is akin to striking the perfect chord on a piano—it requires precision, timing, and a deep understanding of the instrument itself. When interacting with AI models like ChatGPT, the quality of the output hinges heavily on the quality of the input: the prompt. A well-crafted prompt serves as the blueprint for the AI’s response, guiding it towards generating accurate, relevant, and insightful content.

Context windows in large language models (LLMs) play a pivotal role in enhancing the performance and efficiency of these models. By defining the amount of text a model can consider when generating responses, context windows directly influence the model’s ability to produce coherent and contextually relevant outputs. Here’s how context windows contribute to the overall performance and efficiency of language models:
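One practical consequence is that once a conversation outgrows the window, older text has to be dropped or summarized. The sketch below keeps the most recent messages that fit within a token budget. It counts whitespace-separated words as a crude stand-in for real tokens, and `fit_to_context` is a hypothetical helper; an actual application would measure length with the model's own tokenizer.

```python
def fit_to_context(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined size fits the window."""
    kept = []
    used = 0
    # Walk backwards so the newest messages are kept first.
    for message in reversed(messages):
        cost = len(message.split())  # crude stand-in for a real tokenizer
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    # Restore chronological order before returning.
    return list(reversed(kept))


history = ["one two three", "four five", "six seven eight nine"]
window = fit_to_context(history, max_tokens=6)
```

With a budget of six "tokens", the oldest message is dropped and the two most recent ones are kept in their original order.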

Integrating AI to analyze and generate recommendations in WordPress can enhance user experience. Learn how to choose, integrate, configure, and optimize AI tools for personalized content suggestions.